Note: Download the Jupyter notebook: https://docs.doubleml.org/stable/examples/did/py_panel_data_example.ipynb

Python: Real-Data Example for Multi-Period Difference-in-Differences

In this example, we replicate a real-data demo notebook from the did-R-package in order to illustrate the use of DoubleML for multi-period difference-in-differences (DiD) models.

The notebook requires the following packages:

[1]:

import pyreadr

import pandas as pd

import numpy as np

from sklearn.linear_model import LinearRegression, LogisticRegression

from sklearn.dummy import DummyRegressor, DummyClassifier

from sklearn.linear_model import LassoCV, LogisticRegressionCV

from doubleml.data import DoubleMLPanelData

from doubleml.did import DoubleMLDIDMulti

Causal Research Question

Callaway and Sant’Anna (2021) study the causal effect of raising the minimum wage on teen employment in the US using county data over a period from 2001 to 2007. A county is defined as treated if the minimum wage in that county is above the federal minimum wage. We focus on a preprocessed balanced panel data set as provided by the did-R-package. The corresponding documentation for the mpdta data is available from the did package website. We use this data solely as a demonstration example to help readers understand differences between the DoubleML and did packages. An analogous notebook using the same data is available from the did documentation.

We follow the original notebook and provide results under identification based on unconditional and conditional parallel trends. For the Double Machine Learning (DML) Difference-in-Differences estimator, we demonstrate two different specifications, one based on linear and logistic regression and one based on their \(\ell_1\) penalized variants Lasso and logistic regression with cross-validated penalty choice. The results for the former are expected to be very similar to those in the did data example. Minor differences might arise due to the use of sample-splitting in the DML estimation.

Data

We will download and read a preprocessed data file as provided by the did-R-package.

[2]:

# download file from did package for R

url = "https://github.com/bcallaway11/did/raw/refs/heads/master/data/mpdta.rda"

pyreadr.download_file(url, "mpdta.rda")

mpdta = pyreadr.read_r("mpdta.rda")["mpdta"]

mpdta.head()

[2]:

| | year | countyreal | lpop | lemp | first.treat | treat |
|---|---|---|---|---|---|---|
| 0 | 2003 | 8001.0 | 5.896761 | 8.461469 | 2007.0 | 1.0 |
| 1 | 2004 | 8001.0 | 5.896761 | 8.336870 | 2007.0 | 1.0 |
| 2 | 2005 | 8001.0 | 5.896761 | 8.340217 | 2007.0 | 1.0 |
| 3 | 2006 | 8001.0 | 5.896761 | 8.378161 | 2007.0 | 1.0 |
| 4 | 2007 | 8001.0 | 5.896761 | 8.487352 | 2007.0 | 1.0 |

To work with DoubleML, we initialize a DoubleMLPanelData object. The input data has to satisfy some requirements: it should be in long format, with every row containing the information of one unit at one time period. Moreover, the data should contain a column for the unit identifier and a column for the time period. The requirements are virtually identical to those of the did-R-package, as listed in their data example. In line with the naming conventions of DoubleML, the treatment group indicator is passed to DoubleMLPanelData by the d_cols argument. To flexibly handle different time formats, the time variable t_col can handle float, int and datetime formats. More information is available in the user guide. To indicate never-treated units, we set their value for the treatment group variable to np.inf.

Now, we can initialize the DoubleMLPanelData object, specifying

y_col: the outcome

d_cols: the group variable indicating the first treated period for each unit

id_col: the unique identification column for each unit

t_col: the time column

x_cols: the additional pre-treatment controls
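The format requirements can be checked before constructing the data object. The following is a minimal sketch on a made-up toy panel; the helper `check_long_panel`, the column names and the toy values are hypothetical and not part of DoubleML. The balance check mirrors the balanced mpdta panel and is an assumption of this sketch rather than a stated requirement.

```python
import numpy as np
import pandas as pd


def check_long_panel(df, id_col, t_col):
    """Return True if df has one row per (unit, period) and is balanced."""
    # long format: every (unit, period) pair appears at most once
    no_duplicates = not df.duplicated(subset=[id_col, t_col]).any()
    # balanced panel: every unit is observed in every period
    n_periods = df[t_col].nunique()
    balanced = (df.groupby(id_col)[t_col].nunique() == n_periods).all()
    return bool(no_duplicates and balanced)


# hypothetical toy data: two units observed in three periods
toy = pd.DataFrame({
    "unit": [1, 1, 1, 2, 2, 2],
    "year": [2003, 2004, 2005, 2003, 2004, 2005],
    "first_treat": [2004.0, 2004.0, 2004.0, 0.0, 0.0, 0.0],
    "y": [1.0, 1.2, 1.1, 0.9, 1.0, 1.0],
})

# recode never-treated units (coded as 0 here) to np.inf
toy.loc[toy["first_treat"] == 0, "first_treat"] = np.inf

is_valid = check_long_panel(toy, id_col="unit", t_col="year")
print(is_valid)
```

Running such a check first can make format problems easier to locate than an error raised during model initialization.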

[3]:

# Set values for treatment group indicator for never-treated to np.inf

mpdta.loc[mpdta['first.treat'] == 0, 'first.treat'] = np.inf

dml_data = DoubleMLPanelData(

data=mpdta,

y_col="lemp",

d_cols="first.treat",

id_col="countyreal",

t_col="year",

x_cols=['lpop']

)

print(dml_data)

================== DoubleMLPanelData Object ==================

------------------ Data summary ------------------

Outcome variable: lemp

Treatment variable(s): ['first.treat']

Covariates: ['lpop']

Instrument variable(s): None

Time variable: year

Id variable: countyreal

Static panel data: False

No. Unique Ids: 500

No. Observations: 2500

------------------ DataFrame info ------------------

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 2500 entries, 0 to 2499

Columns: 6 entries, year to treat

dtypes: float64(5), int32(1)

memory usage: 107.6 KB

Note that we specified a pre-treatment confounding variable lpop through the x_cols argument. To consider cases under unconditional parallel trends, we can use dummy learners to ignore the pre-treatment confounding variable. This is illustrated below.

ATT Estimation: Unconditional Parallel Trends

We start with identification under the unconditional parallel trends assumption. To do so, we initialize a DoubleMLDIDMulti object (see the model documentation), which takes the previously initialized DoubleMLPanelData object as input. We use scikit-learn’s DummyRegressor and DummyClassifier to ignore the pre-treatment confounding variable. At this stage, we can also pass further options, for example specifying the number of folds and repetitions used for cross-fitting.
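To see why dummy learners ignore the covariates, the following sketch fits both scikit-learn dummy estimators with their default settings on simulated data. The data and variable names are made up for illustration; only the constant-prediction behavior of the default strategies is the point.

```python
import numpy as np
from sklearn.dummy import DummyClassifier, DummyRegressor

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 1))               # a covariate (stand-in for lpop)
y = 2.0 * X[:, 0] + rng.normal(size=100)    # outcome that depends on X
d = (rng.random(100) < 0.3).astype(int)     # binary group indicator

# DummyRegressor (default strategy "mean") predicts the training mean of y
reg_pred = DummyRegressor().fit(X, y).predict(X)

# DummyClassifier (default strategy "prior") predicts the class frequencies
clf_prob = DummyClassifier().fit(X, d).predict_proba(X)[:, 1]

print(np.allclose(reg_pred, y.mean()))
print(np.allclose(clf_prob, d.mean()))
```

Both predictions are constant in X, so the nuisance estimates carry no covariate information even though lpop is still passed through x_cols.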

When calling the fit() method, the model estimates standard combinations of \(ATT(g,t)\) parameters, which correspond to the defaults in the did-R-package. These combinations can also be customized through the gt_combinations argument; see the user guide.
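Under unconditional parallel trends, each \(ATT(g,t)\) parameter is, conceptually, a comparison of mean outcome changes between a treated group and the control group. The sketch below shows this difference-in-differences arithmetic on made-up numbers (not taken from mpdta); it is not the cross-fitted DML estimator, and the helper `mean_change` is hypothetical.

```python
from statistics import mean

# made-up log employment values for a few units
treated_pre = [8.0, 7.5]      # treated group, pre-treatment period
treated_eval = [7.9, 7.3]     # treated group, evaluation period
control_pre = [6.0, 6.5]      # never-treated group, pre-treatment period
control_eval = [6.05, 6.45]   # never-treated group, evaluation period


def mean_change(pre, post):
    """Average change in the outcome between the two periods."""
    return mean(b - a for a, b in zip(pre, post))


# difference in mean changes: treated minus control
att_hat = mean_change(treated_pre, treated_eval) - mean_change(control_pre, control_eval)
print(round(att_hat, 4))
```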

[4]:

dml_obj = DoubleMLDIDMulti(

obj_dml_data=dml_data,

ml_g=DummyRegressor(),

ml_m=DummyClassifier(),

control_group="never_treated",

n_folds=10

)

dml_obj.fit()

print(dml_obj.summary.round(4))

coef std err t P>|t| 2.5 % 97.5 %

ATT(2004.0,2003,2004) -0.0105 0.0233 -0.4512 0.6518 -0.0562 0.0352

ATT(2004.0,2003,2005) -0.0704 0.0311 -2.2603 0.0238 -0.1314 -0.0094

ATT(2004.0,2003,2006) -0.1373 0.0367 -3.7441 0.0002 -0.2091 -0.0654

ATT(2004.0,2003,2007) -0.1008 0.0341 -2.9545 0.0031 -0.1677 -0.0339

ATT(2006.0,2003,2004) 0.0065 0.0232 0.2812 0.7786 -0.0390 0.0521

ATT(2006.0,2004,2005) -0.0027 0.0196 -0.1402 0.8885 -0.0412 0.0357

ATT(2006.0,2005,2006) -0.0046 0.0180 -0.2547 0.7990 -0.0399 0.0307

ATT(2006.0,2005,2007) -0.0412 0.0202 -2.0403 0.0413 -0.0808 -0.0016

ATT(2007.0,2003,2004) 0.0305 0.0151 2.0229 0.0431 0.0009 0.0601

ATT(2007.0,2004,2005) -0.0026 0.0165 -0.1606 0.8724 -0.0350 0.0297

ATT(2007.0,2005,2006) -0.0310 0.0180 -1.7272 0.0841 -0.0662 0.0042

ATT(2007.0,2006,2007) -0.0261 0.0167 -1.5637 0.1179 -0.0587 0.0066

The summary displays estimates of the \(ATT(g,t_\text{eval})\) effects for different combinations of \((g,t_\text{eval})\) via \(\widehat{ATT}(\mathrm{g},t_\text{pre},t_\text{eval})\), where

\(\mathrm{g}\) specifies the group

\(t_\text{pre}\) specifies the corresponding pre-treatment period

\(t_\text{eval}\) specifies the evaluation period

This corresponds to the estimates given by the att_gt function in the did-R-package, where the standard choice is \(t_\text{pre} = \min(\mathrm{g}, t_\text{eval}) - 1\) (without anticipation).

Note that this includes pre-test effects if \(\mathrm{g} > t_\text{eval}\), e.g. \(ATT(2007,2005)\).
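The rule \(t_\text{pre} = \min(\mathrm{g}, t_\text{eval}) - 1\) can be traced by hand. The sketch below enumerates the combinations for the groups and periods in this example; the function `standard_gt_combinations` is a hypothetical helper written for illustration, not a DoubleML function, and it assumes consecutive integer periods and no anticipation.

```python
def standard_gt_combinations(groups, periods):
    """List (g, t_pre, t_eval) with t_pre = min(g, t_eval) - 1."""
    periods = sorted(periods)
    first_period = periods[0]
    combos = []
    for g in sorted(groups):
        for t_eval in periods:
            t_pre = min(g, t_eval) - 1
            # skip evaluation periods without an observed pre-period
            if t_pre >= first_period:
                combos.append((g, t_pre, t_eval))
    return combos


combos = standard_gt_combinations(groups=[2004, 2006, 2007], periods=range(2003, 2008))
for g, t_pre, t_eval in combos:
    kind = "pre-test" if g > t_eval else "post-treatment"
    print(f"ATT({g},{t_pre},{t_eval})  {kind}")
```

The twelve tuples produced this way have the same labels as the rows of the summary table above.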

As usual for the DoubleML-package, you can obtain joint confidence intervals via bootstrap.

[5]:

level = 0.95

ci = dml_obj.confint(level=level)

dml_obj.bootstrap(n_rep_boot=5000)

ci_joint = dml_obj.confint(level=level, joint=True)

print(ci_joint)

2.5 % 97.5 %

ATT(2004.0,2003,2004) -0.076549 0.055502

ATT(2004.0,2003,2005) -0.158564 0.017771

ATT(2004.0,2003,2006) -0.241029 -0.033480

ATT(2004.0,2003,2007) -0.197369 -0.004220

ATT(2006.0,2003,2004) -0.059248 0.072315

ATT(2006.0,2004,2005) -0.058222 0.052727

ATT(2006.0,2005,2006) -0.055538 0.046371

ATT(2006.0,2005,2007) -0.098387 0.015967

ATT(2007.0,2003,2004) -0.012188 0.073218

ATT(2007.0,2004,2005) -0.049307 0.044013

ATT(2007.0,2005,2006) -0.081886 0.019828

ATT(2007.0,2006,2007) -0.073238 0.021117
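Joint intervals are wider than pointwise ones because they use a larger critical value. Assuming the intervals have the symmetric form coefficient ± c · standard error, the value of c can be backed out from the printed numbers. The sketch uses two rows copied from the outputs above; the standard errors are rounded to four decimals, so the result is approximate, and it reflects this particular bootstrap run.

```python
# values copied from the printed summary (se) and the joint intervals
rows = {
    "ATT(2004.0,2003,2004)": {"se": 0.0233, "lower": -0.076549, "upper": 0.055502},
    "ATT(2004.0,2003,2006)": {"se": 0.0367, "lower": -0.241029, "upper": -0.033480},
}

# half-width of the interval divided by the standard error
implied = {
    name: (r["upper"] - r["lower"]) / (2 * r["se"])
    for name, r in rows.items()
}

for name, crit in implied.items():
    print(name, round(crit, 2))
```

Both rows imply a critical value of roughly 2.83, compared with about 1.96 for a pointwise 95% interval.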

A visualization of the effects can be obtained via the plot_effects() method.

Note that the plot uses joint confidence intervals by default.

[6]:

fig, ax = dml_obj.plot_effects()

/opt/hostedtoolcache/Python/3.12.12/x64/lib/python3.12/site-packages/matplotlib/cbook.py:1719: FutureWarning: Calling float on a single element Series is deprecated and will raise a TypeError in the future. Use float(ser.iloc[0]) instead

return math.isfinite(val)

Effect Aggregation#

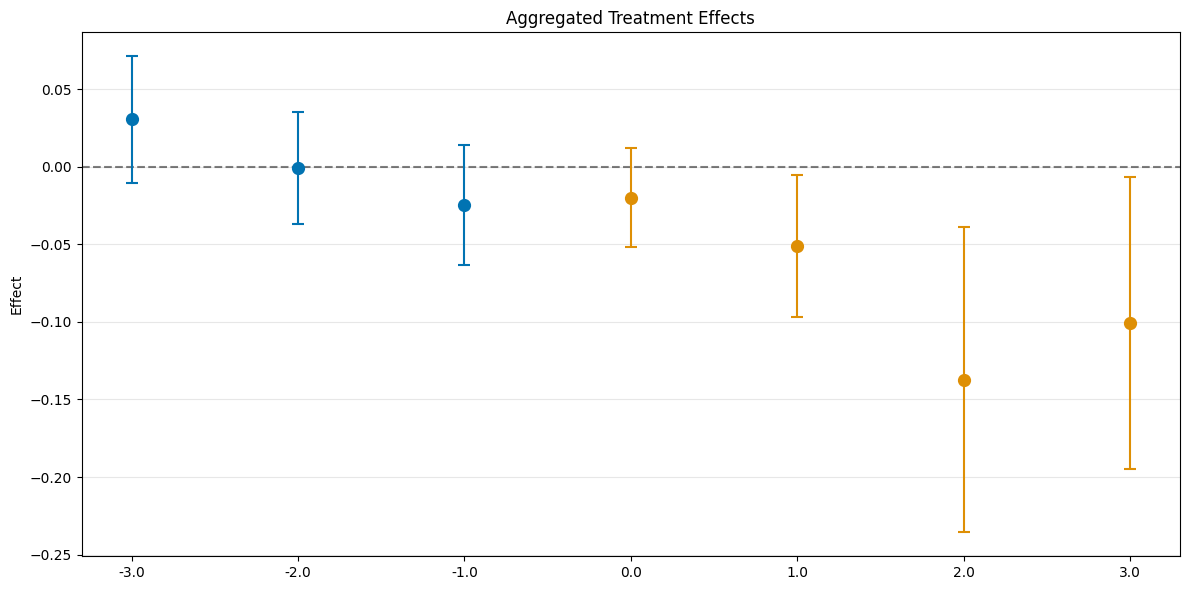

As the did-R-package, the \(ATT\)’s can be aggregated to summarize multiple effects. For details on different aggregations and details on their interpretations see Callaway and Sant’Anna(2021).

The aggregations are implemented via the aggregate() method. We follow the structure of the did package notebook and start with an aggregation relative to the treatment timing.

Event Study Aggregation

We can aggregate the \(ATT\)s relative to the treatment timing. This is done by setting aggregation="eventstudy" in the aggregate() method. aggregation="eventstudy" aggregates \(\widehat{ATT}(\mathrm{g},t_\text{pre},t_\text{eval})\) based on exposure time \(e = t_\text{eval} - \mathrm{g}\) (respecting group size).
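To make the grouping by exposure time concrete, the sketch below computes \(e = t_\text{eval} - \mathrm{g}\) for the twelve combinations listed in the summary table and collects the row positions that share the same \(e\). It only illustrates the bookkeeping; the group-size weighting is not reproduced here.

```python
from collections import defaultdict

# (g, t_pre, t_eval) in the order of the summary table
gt_combinations = [
    (2004, 2003, 2004), (2004, 2003, 2005), (2004, 2003, 2006), (2004, 2003, 2007),
    (2006, 2003, 2004), (2006, 2004, 2005), (2006, 2005, 2006), (2006, 2005, 2007),
    (2007, 2003, 2004), (2007, 2004, 2005), (2007, 2005, 2006), (2007, 2006, 2007),
]

# collect row positions by exposure time e = t_eval - g
by_exposure = defaultdict(list)
for idx, (g, t_pre, t_eval) in enumerate(gt_combinations):
    by_exposure[t_eval - g].append(idx)

for e in sorted(by_exposure):
    print(e, by_exposure[e])
```

The seven exposure times from -3 to 3 correspond to the seven rows of the aggregated effects printed below.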

[7]:

# rerun bootstrap for valid simultaneous inference (as values are not saved)

dml_obj.bootstrap(n_rep_boot=5000)

aggregated_eventstudy = dml_obj.aggregate("eventstudy")

# run bootstrap to obtain simultaneous confidence intervals

aggregated_eventstudy.aggregated_frameworks.bootstrap()

print(aggregated_eventstudy)

fig, ax = aggregated_eventstudy.plot_effects()

================== DoubleMLDIDAggregation Object ==================

Event Study Aggregation

------------------ Overall Aggregated Effects ------------------

coef std err t P>|t| 2.5 % 97.5 %

-0.077231 0.01995 -3.871239 0.000108 -0.116332 -0.03813

------------------ Aggregated Effects ------------------

coef std err t P>|t| 2.5 % 97.5 %

-3.0 0.030515 0.015085 2.022874 0.043086 0.000949 0.060081

-2.0 -0.000499 0.013341 -0.037438 0.970136 -0.026647 0.025648

-1.0 -0.024414 0.014275 -1.710227 0.087224 -0.052393 0.003565

0.0 -0.019936 0.011831 -1.684987 0.091991 -0.043125 0.003253

1.0 -0.050939 0.016812 -3.029942 0.002446 -0.083889 -0.017988

2.0 -0.137254 0.036659 -3.744135 0.000181 -0.209104 -0.065405

3.0 -0.100794 0.034115 -2.954540 0.003131 -0.167659 -0.033930

------------------ Additional Information ------------------

Score function: observational

Control group: never_treated

Anticipation periods: 0

Alternatively, the \(ATT\) could also be aggregated according to (calendar) time periods or treatment groups, see the user guide.

Aggregation Details

The DoubleMLDIDAggregation objects include several DoubleMLFramework objects, which support methods like bootstrap() or confint(). Further, the weights can be accessed via the properties

overall_aggregation_weights: weights for the overall aggregation

aggregation_weights: weights for the aggregation

For example, in the eventstudy aggregation, to see how the aggregated effect with \(e=-1\) is computed, look at the third set of weights within the aggregation_weights property. The exposure times are ordered from \(e=-3\) to \(e=3\), so index 2 corresponds to \(e=-1\).

[8]:

aggregated_eventstudy.aggregation_weights[2]

[8]:

array([0. , 0. , 0. , 0. , 0. ,

0.23391813, 0. , 0. , 0. , 0. ,

0.76608187, 0. ])
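The nonzero entries of this weight vector sit at positions 5 and 10, that is, on ATT(2006.0,2004,2005) and ATT(2007.0,2005,2006), which both have exposure time \(e = -1\). As a rough check, the sketch below applies the weights to the coefficients copied from the summary table. Because those coefficients are rounded to four decimals, the result only approximately matches the -1.0 row of the event study output (-0.024414).

```python
# coefficients copied from the summary table (rounded to 4 decimals)
coefs = [
    -0.0105, -0.0704, -0.1373, -0.1008,   # group 2004
    0.0065, -0.0027, -0.0046, -0.0412,    # group 2006
    0.0305, -0.0026, -0.0310, -0.0261,    # group 2007
]

# third set of aggregation weights, as printed above
weights = [
    0.0, 0.0, 0.0, 0.0, 0.0,
    0.23391813, 0.0, 0.0, 0.0, 0.0,
    0.76608187, 0.0,
]

# weighted sum of the group-time effects
aggregated = sum(w * c for w, c in zip(weights, coefs))
print(round(aggregated, 4))
```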

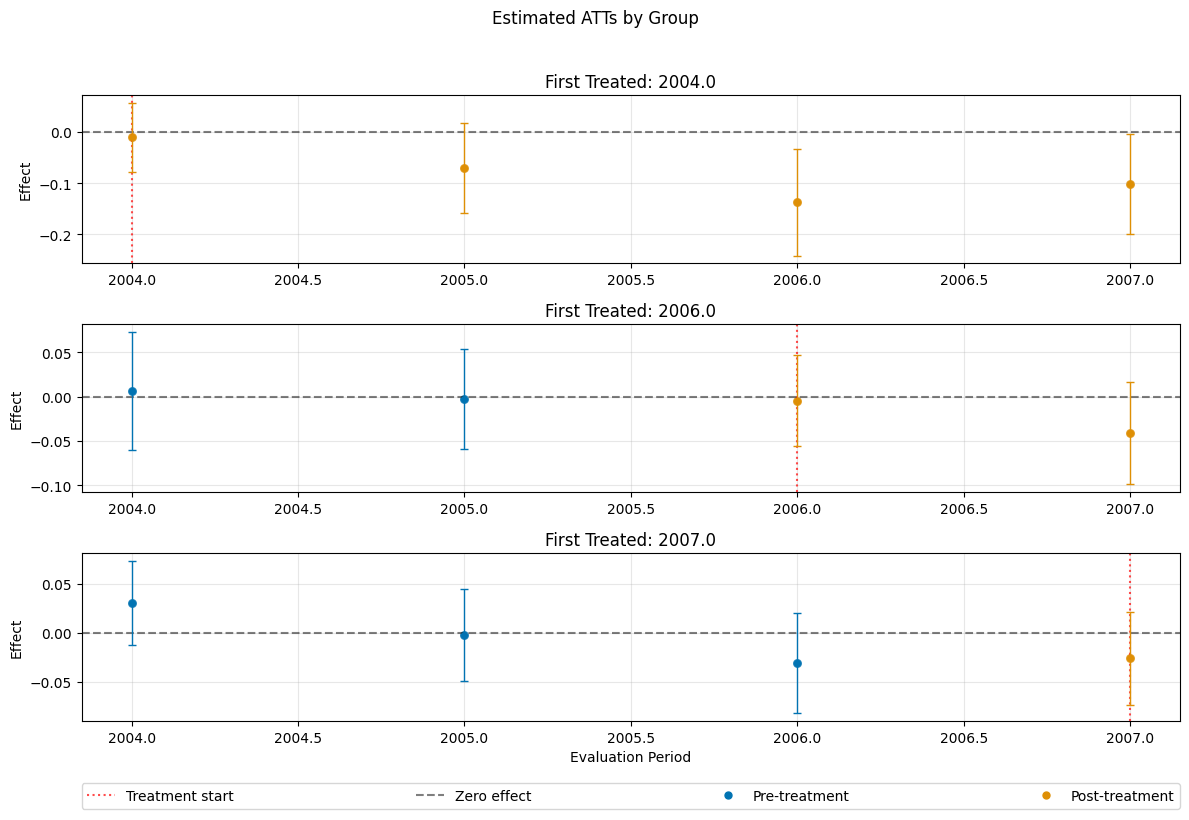

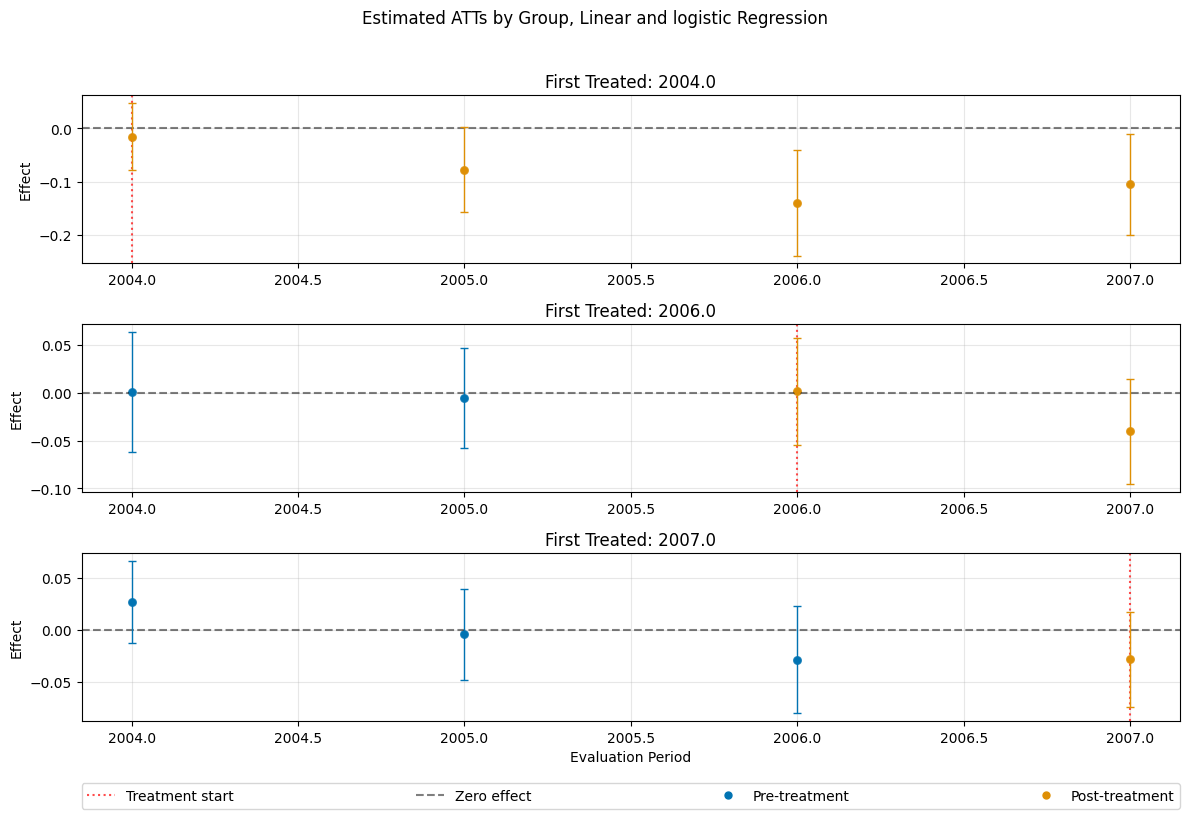

ATT Estimation: Conditional Parallel Trends

We briefly demonstrate how to use the DoubleMLDIDMulti model with conditional parallel trends. As the rationale behind DML is to flexibly model nuisance components as prediction problems, the DML DiD estimator includes pre-treatment covariates by default. In DiD, the nuisance components are the outcome regression and the propensity score estimation for the treatment group variable. This is why we had to enforce dummy learners in the unconditional parallel trends case to ignore the

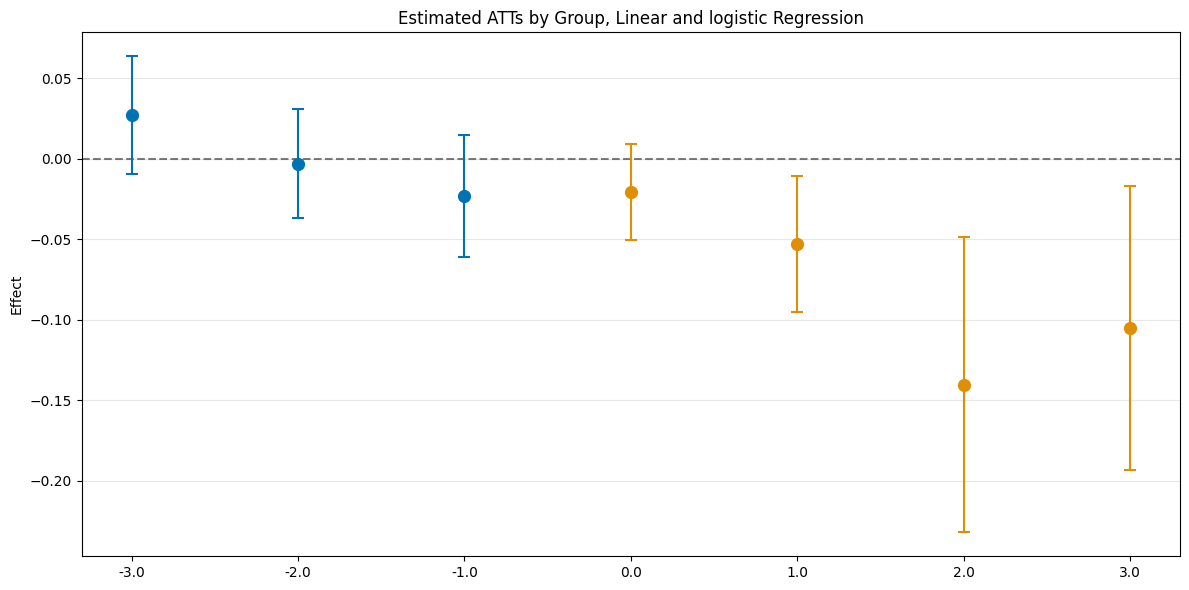

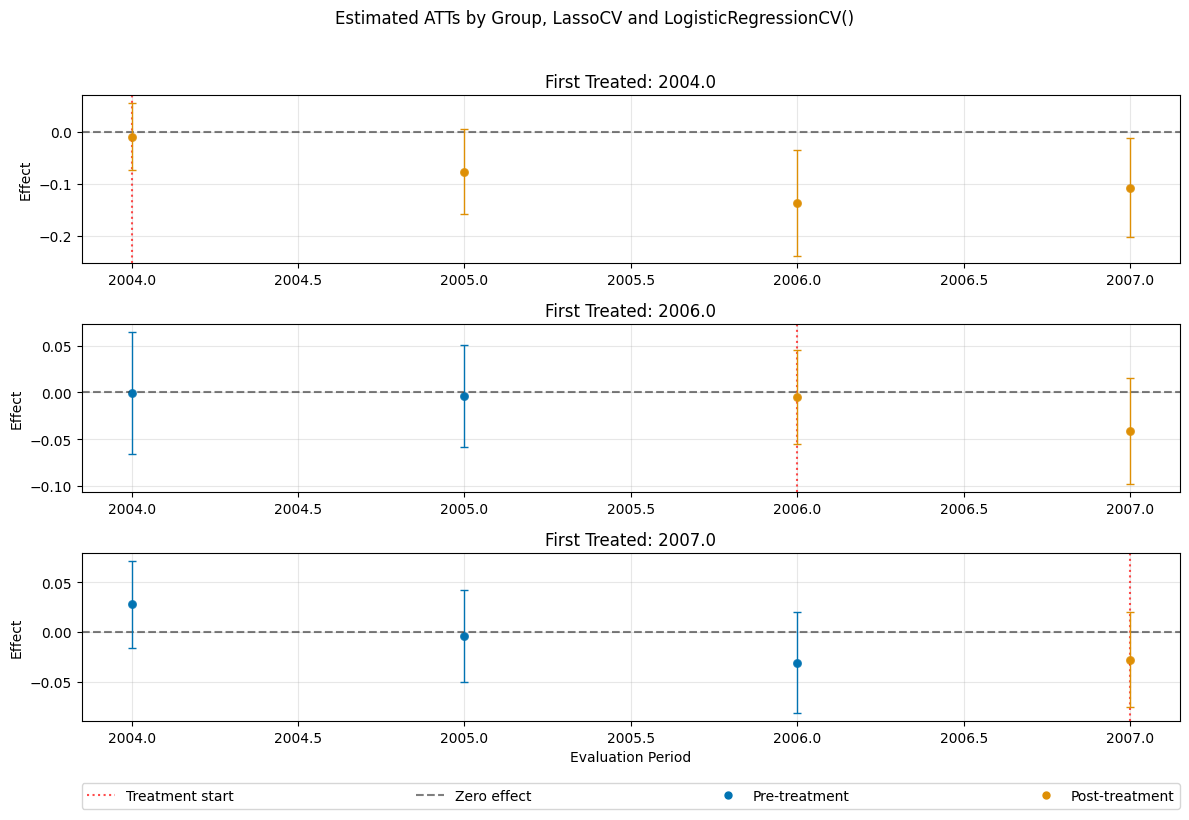

pre-treatment covariates. Now, we can replicate the classical doubly robust DiD estimator as of Callaway and Sant’Anna(2021) by using linear and logistic regression for the nuisance components. This is done by setting ml_g to LinearRegression() and ml_m to LogisticRegression(). Similarly, we can also choose other learners, for example by setting ml_g and ml_m to LassoCV() and LogisticRegressionCV(). We present

the results for the ATTs and their event-study aggregation in the corresponding effect plots.

Please note that the example is meant to illustrate the usage of the DoubleMLDIDMulti model in combination with ML learners. In real-data applications, careful choice and empirical evaluation of the learners are required. Default measures for the prediction of the nuisance components are printed in the model summary, as briefly illustrated below.

[9]:

dml_obj_linear_logistic = DoubleMLDIDMulti(

obj_dml_data=dml_data,

ml_g=LinearRegression(),

ml_m=LogisticRegression(penalty=None),

control_group="never_treated",

n_folds=10

)

dml_obj_linear_logistic.fit()

dml_obj_linear_logistic.bootstrap(n_rep_boot=5000)

dml_obj_linear_logistic.plot_effects(title="Estimated ATTs by Group, Linear and logistic Regression")


[9]:

(<Figure size 1200x800 with 4 Axes>,

[<Axes: title={'center': 'First Treated: 2004.0'}, ylabel='Effect'>,

<Axes: title={'center': 'First Treated: 2006.0'}, ylabel='Effect'>,

<Axes: title={'center': 'First Treated: 2007.0'}, xlabel='Evaluation Period', ylabel='Effect'>])

We briefly look at the model summary, which includes some standard diagnostics for the prediction of the nuisance components.

[10]:

print(dml_obj_linear_logistic)

================== DoubleMLDIDMulti Object ==================

------------------ Data summary ------------------

Outcome variable: lemp

Treatment variable(s): ['first.treat']

Covariates: ['lpop']

Instrument variable(s): None

Time variable: year

Id variable: countyreal

Static panel data: False

No. Unique Ids: 500

No. Observations: 2500

------------------ Score & algorithm ------------------

Score function: observational

Control group: never_treated

Anticipation periods: 0

------------------ Machine learner ------------------

Learner ml_g: LinearRegression()

Learner ml_m: LogisticRegression(penalty=None)

Out-of-sample Performance:

Regression:

Learner ml_g0 RMSE: [[0.17260321 0.18165055 0.25781839 0.2579003 0.17204827 0.15135118

0.20146379 0.20565979 0.1728368 0.15160537 0.20106681 0.16421457]]

Learner ml_g1 RMSE: [[0.10389687 0.13031684 0.14125079 0.14934119 0.14079369 0.11361402

0.08768186 0.10853587 0.13343232 0.16091219 0.15914093 0.16105204]]

Classification:

Learner ml_m Log Loss: [[0.229904 0.2306338 0.23168941 0.23093647 0.3485328 0.34770933

0.34987408 0.3501404 0.60761495 0.6072282 0.60603416 0.60728822]]

------------------ Resampling ------------------

No. folds: 10

No. repeated sample splits: 1

------------------ Fit summary ------------------

coef std err t P>|t| 2.5 % \

ATT(2004.0,2003,2004) -0.015547 0.021985 -0.707139 0.479480 -0.058637

ATT(2004.0,2003,2005) -0.074930 0.028414 -2.637092 0.008362 -0.130620

ATT(2004.0,2003,2006) -0.137738 0.035098 -3.924319 0.000087 -0.206529

ATT(2004.0,2003,2007) -0.105005 0.032403 -3.240592 0.001193 -0.168514

ATT(2006.0,2003,2004) 0.000056 0.022314 0.002503 0.998003 -0.043679

ATT(2006.0,2004,2005) -0.005934 0.018236 -0.325381 0.744893 -0.041677

ATT(2006.0,2005,2006) -0.000843 0.019336 -0.043586 0.965234 -0.038741

ATT(2006.0,2005,2007) -0.039795 0.019787 -2.011139 0.044311 -0.078578

ATT(2007.0,2003,2004) 0.026739 0.014222 1.880138 0.060089 -0.001135

ATT(2007.0,2004,2005) -0.003662 0.015705 -0.233194 0.815611 -0.034443

ATT(2007.0,2005,2006) -0.029965 0.018215 -1.645100 0.099949 -0.065665

ATT(2007.0,2006,2007) -0.030489 0.016292 -1.871392 0.061291 -0.062421

97.5 %

ATT(2004.0,2003,2004) 0.027544

ATT(2004.0,2003,2005) -0.019240

ATT(2004.0,2003,2006) -0.068946

ATT(2004.0,2003,2007) -0.041496

ATT(2006.0,2003,2004) 0.043791

ATT(2006.0,2004,2005) 0.029809

ATT(2006.0,2005,2006) 0.037055

ATT(2006.0,2005,2007) -0.001013

ATT(2007.0,2003,2004) 0.054613

ATT(2007.0,2004,2005) 0.027118

ATT(2007.0,2005,2006) 0.005735

ATT(2007.0,2006,2007) 0.001443
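One way to read the log loss values in this summary is to compare them with a baseline that predicts the same treatment probability for every unit. The sketch below computes that baseline under two assumptions that are not shown in the notebook output: the group sizes (20, 40 and 131 treated counties for 2004, 2006 and 2007, and 309 never-treated counties), and that the loss for each group is evaluated on that group plus the never-treated units. The 40 : 131 ratio is at least consistent with the aggregation weights 0.2339 and 0.7661 printed earlier. The helper `prior_log_loss` is hypothetical.

```python
import math


def prior_log_loss(n_treated, n_control):
    """Log loss of predicting the treated share for every unit."""
    p = n_treated / (n_treated + n_control)
    return -(p * math.log(p) + (1 - p) * math.log(1 - p))


# ASSUMED counts, not printed in the notebook
n_never_treated = 309
group_sizes = {2004: 20, 2006: 40, 2007: 131}

baselines = {g: prior_log_loss(n, n_never_treated) for g, n in group_sizes.items()}
for g, value in baselines.items():
    print(g, round(value, 4))
```

If these assumptions hold, baselines of roughly 0.229, 0.356 and 0.609 are very close to the reported ml_m log losses, which would suggest that lpop adds little predictive power for the propensity score in this data.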

[11]:

es_linear_logistic = dml_obj_linear_logistic.aggregate("eventstudy")

es_linear_logistic.aggregated_frameworks.bootstrap()

es_linear_logistic.plot_effects(title="Estimated ATTs by Group, Linear and logistic Regression")

[11]:

(<Figure size 1200x600 with 1 Axes>,

<Axes: title={'center': 'Estimated ATTs by Group, Linear and logistic Regression'}, ylabel='Effect'>)

[12]:

dml_obj_lasso = DoubleMLDIDMulti(

obj_dml_data=dml_data,

ml_g=LassoCV(),

ml_m=LogisticRegressionCV(),

control_group="never_treated",

n_folds=10

)

dml_obj_lasso.fit()

dml_obj_lasso.bootstrap(n_rep_boot=5000)

dml_obj_lasso.plot_effects(title="Estimated ATTs by Group, LassoCV and LogisticRegressionCV()")


[12]:

(<Figure size 1200x800 with 4 Axes>,

[<Axes: title={'center': 'First Treated: 2004.0'}, ylabel='Effect'>,

<Axes: title={'center': 'First Treated: 2006.0'}, ylabel='Effect'>,

<Axes: title={'center': 'First Treated: 2007.0'}, xlabel='Evaluation Period', ylabel='Effect'>])

[13]:

# Model summary

print(dml_obj_lasso)

================== DoubleMLDIDMulti Object ==================

------------------ Data summary ------------------

Outcome variable: lemp

Treatment variable(s): ['first.treat']

Covariates: ['lpop']

Instrument variable(s): None

Time variable: year

Id variable: countyreal

Static panel data: False

No. Unique Ids: 500

No. Observations: 2500

------------------ Score & algorithm ------------------

Score function: observational

Control group: never_treated

Anticipation periods: 0

------------------ Machine learner ------------------

Learner ml_g: LassoCV()

Learner ml_m: LogisticRegressionCV()

Out-of-sample Performance:

Regression:

Learner ml_g0 RMSE: [[0.17284165 0.18190243 0.25859066 0.26000651 0.17205776 0.15144562

0.20076945 0.20571705 0.17291711 0.15151993 0.20081731 0.16431912]]

Learner ml_g1 RMSE: [[0.10078013 0.12785298 0.14194819 0.15158475 0.14098199 0.11510938

0.08880396 0.1063245 0.13345029 0.16117911 0.1591569 0.15905967]]

Classification:

Learner ml_m Log Loss: [[0.2291382 0.22913816 0.2291371 0.22914071 0.35596076 0.35597244

0.35596086 0.35596503 0.60885735 0.60884642 0.60884671 0.60883713]]

------------------ Resampling ------------------

No. folds: 10

No. repeated sample splits: 1

------------------ Fit summary ------------------

coef std err t P>|t| 2.5 % \

ATT(2004.0,2003,2004) -0.012871 0.022821 -0.564019 0.572741 -0.057600

ATT(2004.0,2003,2005) -0.077390 0.029072 -2.662002 0.007768 -0.134370

ATT(2004.0,2003,2006) -0.139637 0.035430 -3.941177 0.000081 -0.209080

ATT(2004.0,2003,2007) -0.107617 0.034060 -3.159607 0.001580 -0.174373

ATT(2006.0,2003,2004) 0.001022 0.023290 0.043890 0.964992 -0.044625

ATT(2006.0,2004,2005) -0.005360 0.019453 -0.275550 0.782894 -0.043487

ATT(2006.0,2005,2006) -0.004383 0.017918 -0.244623 0.806749 -0.039503

ATT(2006.0,2005,2007) -0.041191 0.020280 -2.031096 0.042245 -0.080940

ATT(2007.0,2003,2004) 0.027307 0.015194 1.797228 0.072299 -0.002473

ATT(2007.0,2004,2005) -0.003569 0.016336 -0.218477 0.827057 -0.035586

ATT(2007.0,2005,2006) -0.030981 0.017936 -1.727321 0.084110 -0.066135

ATT(2007.0,2006,2007) -0.026967 0.016746 -1.610394 0.107312 -0.059789

97.5 %

ATT(2004.0,2003,2004) 0.031857

ATT(2004.0,2003,2005) -0.020410

ATT(2004.0,2003,2006) -0.070195

ATT(2004.0,2003,2007) -0.040860

ATT(2006.0,2003,2004) 0.046670

ATT(2006.0,2004,2005) 0.032767

ATT(2006.0,2005,2006) 0.030736

ATT(2006.0,2005,2007) -0.001443

ATT(2007.0,2003,2004) 0.057086

ATT(2007.0,2004,2005) 0.028448

ATT(2007.0,2005,2006) 0.004173

ATT(2007.0,2006,2007) 0.005854

[14]:

es_rf = dml_obj_lasso.aggregate("eventstudy")

es_rf.aggregated_frameworks.bootstrap()

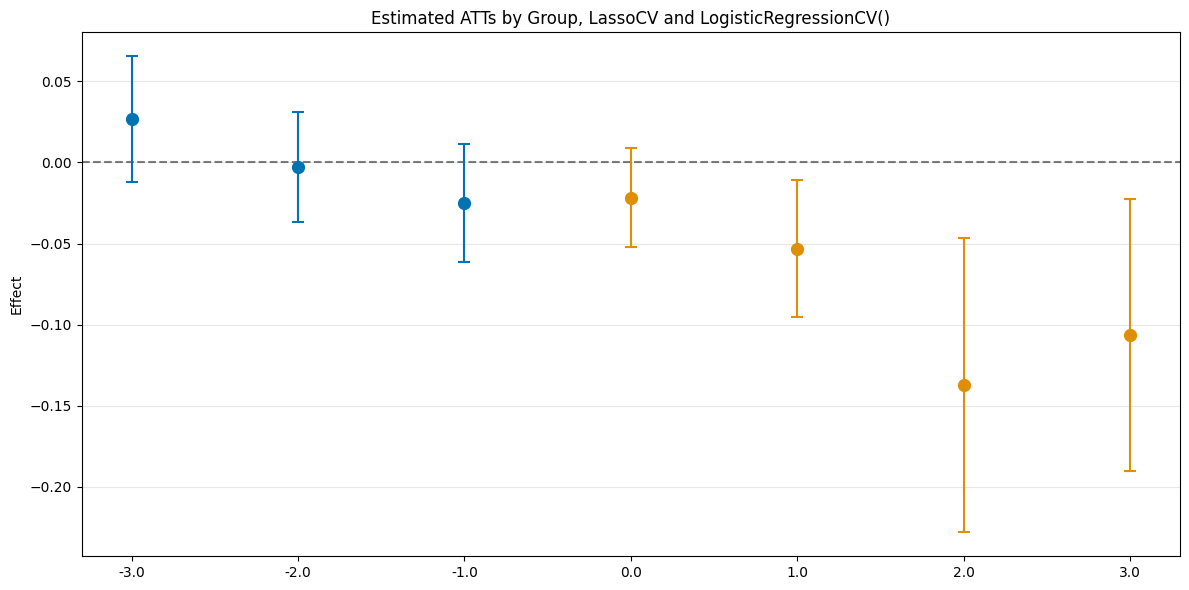

es_rf.plot_effects(title="Estimated ATTs by Group, LassoCV and LogisticRegressionCV()")

[14]:

(<Figure size 1200x600 with 1 Axes>,

<Axes: title={'center': 'Estimated ATTs by Group, LassoCV and LogisticRegressionCV()'}, ylabel='Effect'>)