Note: this example is available as a Jupyter notebook at https://docs.doubleml.org/stable/examples/did/py_panel_simple.ipynb.

Python: Panel Data Introduction

In this example, we replicate the results from the guide "Getting Started with the did Package" of the did-R-package.

Like the did-R-package, the DoubleML implementation is based on Callaway and Sant’Anna (2021).

The notebook requires the following packages:

[1]:

import pandas as pd

import numpy as np

from sklearn.linear_model import LinearRegression, LogisticRegression

from doubleml.data import DoubleMLPanelData

from doubleml.did import DoubleMLDIDMulti

Data

The data we will use is simulated and part of the CSDID-Python-Package.

A description of the data generating process can be found at the CSDID-documentation.

[2]:

dta = pd.read_csv("https://raw.githubusercontent.com/d2cml-ai/csdid/main/data/sim_data.csv")

dta.head()

[2]:

| | G | X | id | cluster | period | Y | treat |

|---|---|---|---|---|---|---|---|

| 0 | 3 | -0.876233 | 1 | 5 | 1 | 5.562556 | 1 |

| 1 | 3 | -0.876233 | 1 | 5 | 2 | 4.349213 | 1 |

| 2 | 3 | -0.876233 | 1 | 5 | 3 | 7.134037 | 1 |

| 3 | 3 | -0.876233 | 1 | 5 | 4 | 6.243056 | 1 |

| 4 | 2 | -0.873848 | 2 | 36 | 1 | -3.659387 | 1 |

To work with the DoubleML-package, we initialize a DoubleMLPanelData object.

Before doing so, we set the never-treated units in the group column G to np.inf. Because an integer column cannot hold np.inf, we first change the datatype of G to float.

[3]:

# set dtype for G to float

dta["G"] = dta["G"].astype(float)

dta.loc[dta["G"] == 0, "G"] = np.inf

dta.head()

[3]:

| | G | X | id | cluster | period | Y | treat |

|---|---|---|---|---|---|---|---|

| 0 | 3.0 | -0.876233 | 1 | 5 | 1 | 5.562556 | 1 |

| 1 | 3.0 | -0.876233 | 1 | 5 | 2 | 4.349213 | 1 |

| 2 | 3.0 | -0.876233 | 1 | 5 | 3 | 7.134037 | 1 |

| 3 | 3.0 | -0.876233 | 1 | 5 | 4 | 6.243056 | 1 |

| 4 | 2.0 | -0.873848 | 2 | 36 | 1 | -3.659387 | 1 |
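The recoding step can be checked on a small example. The following is a minimal sketch: the three-row frame `toy` is made up for illustration and is not part of the simulated data set.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data: G == 0 marks never-treated units, as in the raw file.
toy = pd.DataFrame({"id": [1, 2, 3], "G": [3, 0, 2]})

# An integer column cannot store np.inf, so cast to float first.
toy["G"] = toy["G"].astype(float)
toy.loc[toy["G"] == 0, "G"] = np.inf

# Units whose first treatment period is infinite are the never-treated ones.
never_treated = toy.loc[np.isinf(toy["G"]), "id"].tolist()
print(toy)
print(never_treated)
```

Only the unit with G equal to 0 ends up with an infinite first treatment period; the other group values are unchanged apart from the float cast.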

Now, we can initialize the DoubleMLPanelData object, specifying

y_col: the outcome

d_cols: the group variable indicating the first treated period for each unit

id_col: the unique identification column for each unit

t_col: the time column

x_cols: the additional pre-treatment controls

[4]:

dml_data = DoubleMLPanelData(
    data=dta,
    y_col="Y",
    d_cols="G",
    id_col="id",
    t_col="period",
    x_cols=["X"],
)

print(dml_data)

================== DoubleMLPanelData Object ==================

------------------ Data summary ------------------

Outcome variable: Y

Treatment variable(s): ['G']

Covariates: ['X']

Instrument variable(s): None

Time variable: period

Id variable: id

Static panel data: False

No. Unique Ids: 3979

No. Observations: 15916

------------------ DataFrame info ------------------

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 15916 entries, 0 to 15915

Columns: 7 entries, G to treat

dtypes: float64(3), int64(4)

memory usage: 870.5 KB

ATT Estimation

The DoubleML-package implements the estimation of group-time average treatment effects via the DoubleMLDIDMulti class (see model documentation).

The class behaves like other DoubleML classes and requires the specification of two learners: ml_g for the outcome regression and ml_m for the propensity score (for more details on the regression elements, see score documentation). The model is estimated with the fit() method.

[5]:

dml_obj = DoubleMLDIDMulti(
    obj_dml_data=dml_data,
    ml_g=LinearRegression(),
    ml_m=LogisticRegression(),
    control_group="never_treated",
)

dml_obj.fit()

print(dml_obj)

================== DoubleMLDIDMulti Object ==================

------------------ Data summary ------------------

Outcome variable: Y

Treatment variable(s): ['G']

Covariates: ['X']

Instrument variable(s): None

Time variable: period

Id variable: id

Static panel data: False

No. Unique Ids: 3979

No. Observations: 15916

------------------ Score & algorithm ------------------

Score function: observational

Control group: never_treated

Anticipation periods: 0

------------------ Machine learner ------------------

Learner ml_g: LinearRegression()

Learner ml_m: LogisticRegression()

Out-of-sample Performance:

Regression:

Learner ml_g0 RMSE: [[1.42676563 1.41265085 1.39988763 1.42668917 1.40352724 1.42262065

1.42518063 1.40465121 1.42308983]]

Learner ml_g1 RMSE: [[1.4039586 1.43903595 1.3967635 1.41346325 1.4250619 1.38352699

1.46052081 1.41822744 1.40728579]]

Classification:

Learner ml_m Log Loss: [[0.6911068 0.69084952 0.69085175 0.68030933 0.67987042 0.67915492

0.66242556 0.6625067 0.66212594]]

------------------ Resampling ------------------

No. folds: 5

No. repeated sample splits: 1

------------------ Fit summary ------------------

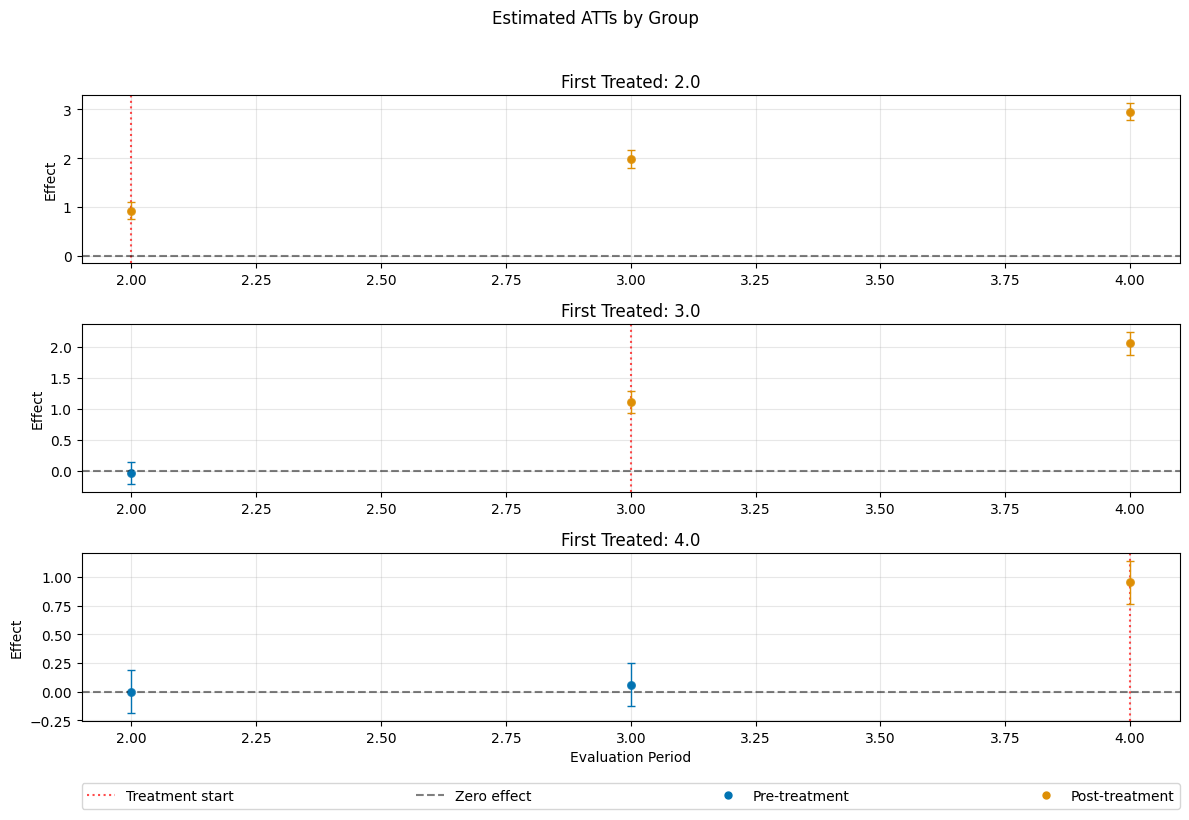

coef std err t P>|t| 2.5 % 97.5 %

ATT(2.0,1,2) 0.925458 0.064120 14.433221 0.000000 0.799785 1.051131

ATT(2.0,1,3) 1.989203 0.064699 30.745484 0.000000 1.862395 2.116011

ATT(2.0,1,4) 2.951405 0.063280 46.640434 0.000000 2.827378 3.075431

ATT(3.0,1,2) -0.034680 0.066031 -0.525214 0.599434 -0.164099 0.094738

ATT(3.0,2,3) 1.113239 0.065350 17.035002 0.000000 0.985155 1.241323

ATT(3.0,2,4) 2.055487 0.065477 31.392537 0.000000 1.927155 2.183820

ATT(4.0,1,2) 0.002416 0.068377 0.035340 0.971809 -0.131599 0.136432

ATT(4.0,2,3) 0.064144 0.066283 0.967732 0.333178 -0.065768 0.194057

ATT(4.0,3,4) 0.954969 0.067381 14.172688 0.000000 0.822905 1.087033

The summary displays estimates of the \(ATT(g,t_\text{eval})\) effects for different combinations of \((g,t_\text{eval})\) via \(\widehat{ATT}(\mathrm{g},t_\text{pre},t_\text{eval})\), where

\(\mathrm{g}\) specifies the group

\(t_\text{pre}\) specifies the corresponding pre-treatment period

\(t_\text{eval}\) specifies the evaluation period

This corresponds to the estimates returned by the att_gt function in the did-R-package, where the standard choice is \(t_\text{pre} = \min(\mathrm{g}, t_\text{eval}) - 1\) (without anticipation).

Note that this includes pre-test effects if \(\mathrm{g} > t_\text{eval}\), e.g. \(ATT(4,2)\).
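The rule for \(t_\text{pre}\) can be spelled out for the groups and periods in this data set. The sketch below is purely illustrative: `att_labels` is a hypothetical helper and not part of DoubleML, and it only applies the formula above to groups 2, 3, 4 and periods 1 to 4.

```python
def att_labels(groups, periods):
    """Enumerate (g, t_pre, t_eval) with t_pre = min(g, t_eval) - 1."""
    first_period = min(periods)
    combos = []
    for g in groups:
        for t_eval in periods:
            t_pre = min(g, t_eval) - 1
            if t_pre < first_period:
                continue  # no earlier period is available as a baseline
            combos.append((g, t_pre, t_eval))
    return combos


combos = att_labels(groups=[2, 3, 4], periods=[1, 2, 3, 4])

# Combinations with g > t_eval are evaluated before treatment starts (pre-tests).
pre_tests = [c for c in combos if c[0] > c[2]]

print(combos)
print(pre_tests)
```

The nine resulting triples match the row labels in the fit summary, and the three pre-test combinations are the rows whose estimates are close to zero.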

As usual for the DoubleML-package, you can obtain joint confidence intervals via bootstrap.

[6]:

level = 0.95

ci = dml_obj.confint(level=level)

dml_obj.bootstrap(n_rep_boot=5000)

ci_joint = dml_obj.confint(level=level, joint=True)

ci_joint

[6]:

| | 2.5 % | 97.5 % |

|---|---|---|

| ATT(2.0,1,2) | 0.752447 | 1.098469 |

| ATT(2.0,1,3) | 1.814629 | 2.163777 |

| ATT(2.0,1,4) | 2.780660 | 3.122150 |

| ATT(3.0,1,2) | -0.212848 | 0.143487 |

| ATT(3.0,2,3) | 0.936909 | 1.289570 |

| ATT(3.0,2,4) | 1.878815 | 2.232160 |

| ATT(4.0,1,2) | -0.182080 | 0.186913 |

| ATT(4.0,2,3) | -0.114704 | 0.242993 |

| ATT(4.0,3,4) | 0.773159 | 1.136779 |
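To see how much wider the joint intervals are, one can compare them with the pointwise intervals from the fit summary. The sketch below hard-codes the printed values for ATT(2.0,1,2). It assumes the joint interval is symmetric around the estimate, and since the bootstrap is random the joint bounds will differ slightly between runs.

```python
from statistics import NormalDist

# Values copied from the printed outputs for ATT(2.0,1,2).
coef, se = 0.925458, 0.064120
joint_lower, joint_upper = 0.752447, 1.098469

# Pointwise 95 % interval: estimate plus/minus the normal quantile times std err.
z = NormalDist().inv_cdf(0.975)
pointwise = (coef - z * se, coef + z * se)

# Critical value implied by the joint interval: half-width divided by std err.
joint_crit = (joint_upper - joint_lower) / 2 / se

print(pointwise)
print(z, joint_crit)
```

The implied joint critical value is noticeably larger than the pointwise normal quantile, which is the price paid for simultaneous coverage over all nine effects.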

A visualization of the effects can be obtained via the plot_effects() method.

Note that the plot uses joint confidence intervals by default.

[7]:

fig, ax = dml_obj.plot_effects()

/opt/hostedtoolcache/Python/3.12.12/x64/lib/python3.12/site-packages/matplotlib/cbook.py:1719: FutureWarning: Calling float on a single element Series is deprecated and will raise a TypeError in the future. Use float(ser.iloc[0]) instead

return math.isfinite(val)


Effect Aggregation

As in the did-R-package, the \(ATT\)s can be aggregated to summarize multiple effects. For details on the different aggregations and their interpretation, see Callaway and Sant’Anna (2021).

The aggregations are implemented via the aggregate() method.

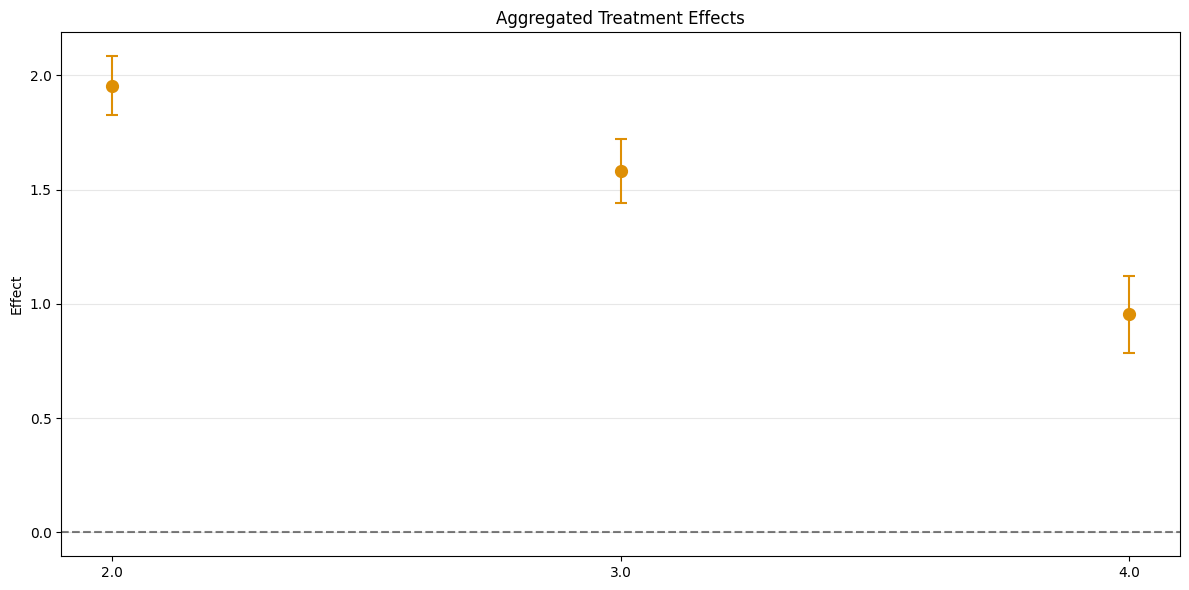

Group Aggregation

To obtain group-specific effects it is possible to aggregate several \(\widehat{ATT}(\mathrm{g},t_\text{pre},t_\text{eval})\) values based on the group \(\mathrm{g}\) by setting the aggregation="group" argument.

[8]:

aggregated = dml_obj.aggregate(aggregation="group")

print(aggregated)

_ = aggregated.plot_effects()

================== DoubleMLDIDAggregation Object ==================

Group Aggregation

------------------ Overall Aggregated Effects ------------------

coef std err t P>|t| 2.5 % 97.5 %

1.489499 0.03422 43.527268 0.0 1.42243 1.556569

------------------ Aggregated Effects ------------------

coef std err t P>|t| 2.5 % 97.5 %

2.0 1.955355 0.052304 37.384474 0.0 1.852841 2.057869

3.0 1.584363 0.056252 28.165289 0.0 1.474111 1.694616

4.0 0.954969 0.067381 14.172688 0.0 0.822905 1.087033

------------------ Additional Information ------------------

Score function: observational

Control group: never_treated

Anticipation periods: 0

/home/runner/work/doubleml-docs/doubleml-docs/doubleml-for-py/doubleml/did/did_aggregation.py:368: UserWarning: Joint confidence intervals require bootstrapping which hasn't been performed yet. Automatically applying '.aggregated_frameworks.bootstrap(method="normal", n_rep_boot=500)' with default values. For different bootstrap settings, call bootstrap() explicitly before plotting.

warnings.warn(

The output is a DoubleMLDIDAggregation object which includes an overall aggregation summary based on group size.
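The group aggregation can be reproduced by hand from the printed estimates. This is a minimal sketch, not the library's implementation: the group-size shares are taken from the nonzero event study weights printed in the Aggregation Details section, and reading them as shares of treated units is an assumption.

```python
import numpy as np

# Post-treatment estimates (t_eval >= g) copied from the fit summary.
post_effects = {
    2.0: [0.925458, 1.989203, 2.951405],
    3.0: [1.113239, 2.055487],
    4.0: [0.954969],
}

# Assumed group-size shares among treated units for groups 2, 3 and 4.
group_share = np.array([0.32875335, 0.32674263, 0.34450402])

# Each group effect is the simple average of that group's post-treatment ATTs.
group_effects = np.array([np.mean(v) for v in post_effects.values()])

# The overall effect weights the group effects by group size.
overall = float(group_share @ group_effects)

print(group_effects)
print(overall)
```

Up to rounding of the printed inputs, the three group effects and the overall effect agree with the aggregation summary above.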

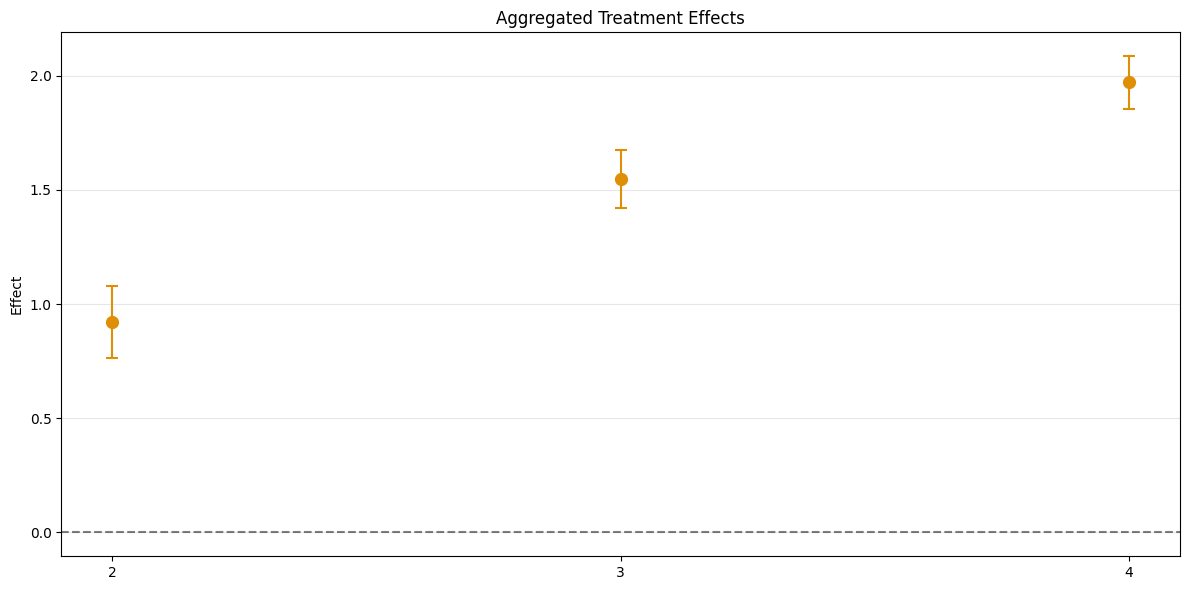

Time Aggregation

This aggregation averages \(\widehat{ATT}(\mathrm{g},t_\text{pre},t_\text{eval})\) over the groups already treated in each evaluation period \(t_\text{eval}\), weighted by group size. It corresponds to the calendar time effects in the did-R-package.

To obtain calendar time effects, set aggregation="time".

[9]:

aggregated_time = dml_obj.aggregate("time")

print(aggregated_time)

fig, ax = aggregated_time.plot_effects()

================== DoubleMLDIDAggregation Object ==================

Time Aggregation

------------------ Overall Aggregated Effects ------------------

coef std err t P>|t| 2.5 % 97.5 %

1.482971 0.035127 42.217006 0.0 1.414123 1.551819

------------------ Aggregated Effects ------------------

coef std err t P>|t| 2.5 % 97.5 %

2 0.925458 0.064120 14.433221 0.0 0.799785 1.051131

3 1.552565 0.051328 30.248125 0.0 1.451964 1.653165

4 1.970890 0.046586 42.306230 0.0 1.879583 2.062198

------------------ Additional Information ------------------

Score function: observational

Control group: never_treated

Anticipation periods: 0

/home/runner/work/doubleml-docs/doubleml-docs/doubleml-for-py/doubleml/did/did_aggregation.py:368: UserWarning: Joint confidence intervals require bootstrapping which hasn't been performed yet. Automatically applying '.aggregated_frameworks.bootstrap(method="normal", n_rep_boot=500)' with default values. For different bootstrap settings, call bootstrap() explicitly before plotting.

warnings.warn(

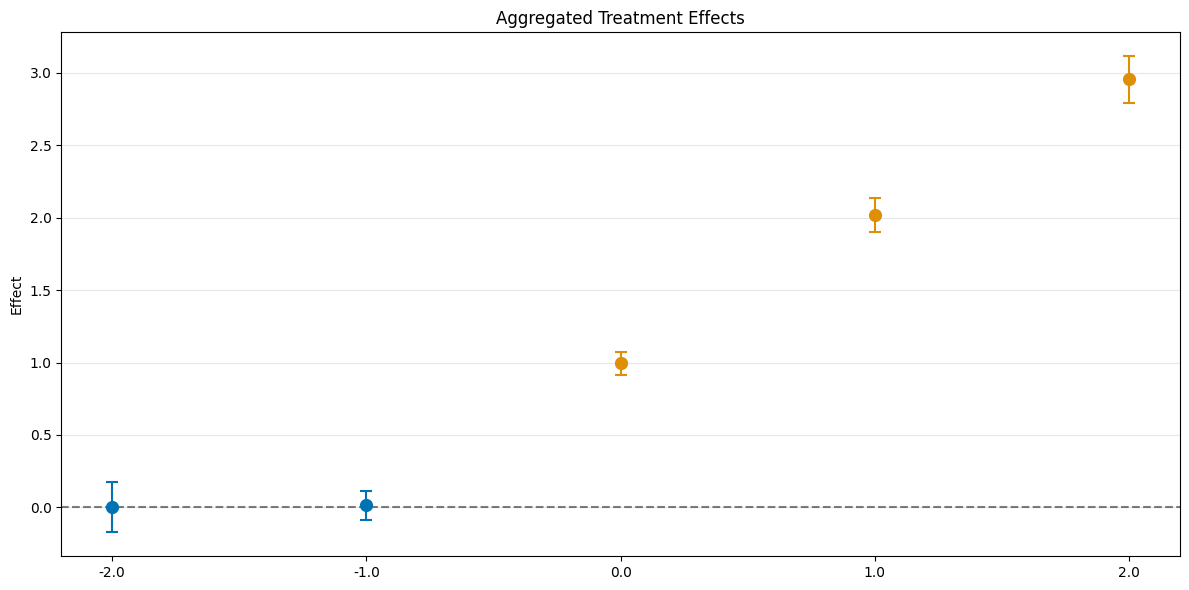
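The calendar time effects can also be recomputed from the printed estimates. The sketch below is illustrative only: it assumes the group-size shares equal the nonzero event study weights printed in the Aggregation Details section, and it renormalizes them over the groups already treated in each period.

```python
import numpy as np

# Assumed group-size shares among treated units.
share = {2.0: 0.32875335, 3.0: 0.32674263, 4.0: 0.34450402}

# Post-treatment estimates keyed by (g, t_eval), copied from the fit summary.
att = {
    (2.0, 2): 0.925458, (2.0, 3): 1.989203, (2.0, 4): 2.951405,
    (3.0, 3): 1.113239, (3.0, 4): 2.055487,
    (4.0, 4): 0.954969,
}

time_effects = {}
for t in (2, 3, 4):
    treated = [g for g in share if g <= t]   # groups already treated in period t
    w = np.array([share[g] for g in treated])
    w = w / w.sum()                          # renormalize shares within the period
    time_effects[t] = float(w @ np.array([att[(g, t)] for g in treated]))

# The overall effect is the simple average over the evaluation periods.
overall_time = float(np.mean(list(time_effects.values())))

print(time_effects)
print(overall_time)
```

Up to rounding, the per-period values and the overall average agree with the time aggregation summary.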

Event Study Aggregation

Finally, aggregation="eventstudy" aggregates \(\widehat{ATT}(\mathrm{g},t_\text{pre},t_\text{eval})\) based on exposure time \(e = t_\text{eval} - \mathrm{g}\) (respecting group size).

[10]:

aggregated_eventstudy = dml_obj.aggregate("eventstudy")

print(aggregated_eventstudy)

fig, ax = aggregated_eventstudy.plot_effects()

================== DoubleMLDIDAggregation Object ==================

Event Study Aggregation

------------------ Overall Aggregated Effects ------------------

coef std err t P>|t| 2.5 % 97.5 %

1.99021 0.038759 51.347936 0.0 1.914243 2.066177

------------------ Aggregated Effects ------------------

coef std err t P>|t| 2.5 % 97.5 %

-2.0 0.002416 0.068377 0.035340 0.971809 -0.131599 0.136432

-1.0 0.016040 0.040492 0.396117 0.692018 -0.063323 0.095402

0.0 0.996981 0.030744 32.428757 0.000000 0.936724 1.057237

1.0 2.022243 0.045713 44.237531 0.000000 1.932647 2.111840

2.0 2.951405 0.063280 46.640434 0.000000 2.827378 3.075431

------------------ Additional Information ------------------

Score function: observational

Control group: never_treated

Anticipation periods: 0

/home/runner/work/doubleml-docs/doubleml-docs/doubleml-for-py/doubleml/did/did_aggregation.py:368: UserWarning: Joint confidence intervals require bootstrapping which hasn't been performed yet. Automatically applying '.aggregated_frameworks.bootstrap(method="normal", n_rep_boot=500)' with default values. For different bootstrap settings, call bootstrap() explicitly before plotting.

warnings.warn(
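To make the exposure time concrete, the estimated combinations can be grouped by \(e = t_\text{eval} - \mathrm{g}\). The sketch below only uses the row labels from the fit summary and does not call DoubleML.

```python
from collections import defaultdict

# (g, t_pre, t_eval) combinations taken from the row labels of the fit summary.
combos = [
    (2, 1, 2), (2, 1, 3), (2, 1, 4),
    (3, 1, 2), (3, 2, 3), (3, 2, 4),
    (4, 1, 2), (4, 2, 3), (4, 3, 4),
]

# Group the combinations by exposure time e = t_eval - g.
by_exposure = defaultdict(list)
for g, t_pre, t_eval in combos:
    by_exposure[t_eval - g].append((g, t_pre, t_eval))

for e in sorted(by_exposure):
    print(e, by_exposure[e])
```

Negative exposure times collect the pre-test combinations, while \(e = 0\) collects the first treated period of every group.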

Aggregation Details

The DoubleMLDIDAggregation objects include several DoubleMLFrameworks which support methods like bootstrap() or confint(). Further, the weights can be accessed via the properties

overall_aggregation_weights: weights for the overall aggregation

aggregation_weights: weights for the aggregation

As an example, consider the event study aggregation:

[11]:

print(aggregated_eventstudy)

================== DoubleMLDIDAggregation Object ==================

Event Study Aggregation

------------------ Overall Aggregated Effects ------------------

coef std err t P>|t| 2.5 % 97.5 %

1.99021 0.038759 51.347936 0.0 1.914243 2.066177

------------------ Aggregated Effects ------------------

coef std err t P>|t| 2.5 % 97.5 %

-2.0 0.002416 0.068377 0.035340 0.971809 -0.131599 0.136432

-1.0 0.016040 0.040492 0.396117 0.692018 -0.063323 0.095402

0.0 0.996981 0.030744 32.428757 0.000000 0.936724 1.057237

1.0 2.022243 0.045713 44.237531 0.000000 1.932647 2.111840

2.0 2.951405 0.063280 46.640434 0.000000 2.827378 3.075431

------------------ Additional Information ------------------

Score function: observational

Control group: never_treated

Anticipation periods: 0

Here, the overall aggregation averages the effects with non-negative exposure time (\(e \ge 0\)) using equal weights; the pre-treatment exposures receive weight zero:

[12]:

print(aggregated_eventstudy.overall_aggregation_weights)

[0. 0. 0.33333333 0.33333333 0.33333333]
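Applying these weights to the printed per-exposure effects recovers the overall event study effect. The sketch hard-codes the values shown above rather than reading them from the fitted object.

```python
import numpy as np

# Overall weights for exposure times -2, -1, 0, 1, 2 (as printed above).
overall_weights = np.array([0.0, 0.0, 1 / 3, 1 / 3, 1 / 3])

# Aggregated effects per exposure time, copied from the event study summary.
exposure_effects = np.array([0.002416, 0.016040, 0.996981, 2.022243, 2.951405])

overall_es = float(overall_weights @ exposure_effects)
print(overall_es)
```

The weighted sum agrees with the reported overall effect of 1.99021 up to rounding.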

To see how the aggregated effect for \(e=0\) is computed, look at the third set of weights in the aggregation_weights property (index 2, since the exposure times are ordered \(-2, -1, 0, 1, 2\)):

[13]:

aggregated_eventstudy.aggregation_weights[2]

[13]:

array([0.32875335, 0. , 0. , 0. , 0.32674263,

0. , 0. , 0. , 0.34450402])

Taking a look at the original dml_obj, one can see that this combines the following estimates:

\(\widehat{ATT}(2,1,2)\)

\(\widehat{ATT}(3,2,3)\)

\(\widehat{ATT}(4,3,4)\)

[14]:

print(dml_obj.summary)

coef std err t P>|t| 2.5 % 97.5 %

ATT(2.0,1,2) 0.925458 0.064120 14.433221 0.000000 0.799785 1.051131

ATT(2.0,1,3) 1.989203 0.064699 30.745484 0.000000 1.862395 2.116011

ATT(2.0,1,4) 2.951405 0.063280 46.640434 0.000000 2.827378 3.075431

ATT(3.0,1,2) -0.034680 0.066031 -0.525214 0.599434 -0.164099 0.094738

ATT(3.0,2,3) 1.113239 0.065350 17.035002 0.000000 0.985155 1.241323

ATT(3.0,2,4) 2.055487 0.065477 31.392537 0.000000 1.927155 2.183820

ATT(4.0,1,2) 0.002416 0.068377 0.035340 0.971809 -0.131599 0.136432

ATT(4.0,2,3) 0.064144 0.066283 0.967732 0.333178 -0.065768 0.194057

ATT(4.0,3,4) 0.954969 0.067381 14.172688 0.000000 0.822905 1.087033
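The \(e=0\) effect can be checked by taking the weighted sum of the coefficients with the weights printed above. The sketch hard-codes both arrays from the outputs; with a fitted object one would presumably use `dml_obj.summary["coef"]` instead, which is an assumption based on the column names of the printed summary.

```python
import numpy as np

# Third row of aggregation_weights (exposure e = 0), as printed above.
weights_e0 = np.array([0.32875335, 0.0, 0.0, 0.0, 0.32674263,
                       0.0, 0.0, 0.0, 0.34450402])

# Coefficients in the row order of the summary table.
coefs = np.array([0.925458, 1.989203, 2.951405,
                  -0.034680, 1.113239, 2.055487,
                  0.002416, 0.064144, 0.954969])

# Only ATT(2,1,2), ATT(3,2,3) and ATT(4,3,4) have nonzero weight.
effect_e0 = float(weights_e0 @ coefs)
print(effect_e0)
```

The result agrees with the value 0.996981 reported for exposure 0.0 in the event study summary, up to rounding of the printed inputs.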