DoubleML

Treatment Heterogeneity

Tools for Causality

Grenoble, Sept 25 - 29, 2023

Philipp Bach, Sven Klaassen

Motivation


In many cases, treatment effects are heterogeneous across individuals

Think of the effect of a training program on wages:

- The effect is likely to be different for individuals who participate in the program and those who do not

- Usually, the goal is not to force everyone to do training programs

Heterogeneity is of interest in many applications:

- Effect of a marketing campaign on different types of customers (uplift modeling, e.g., different markets, different age groups, etc.)

- Effect of a drug on different types of patients (e.g. age groups)

Treatment Effect on the Treated


- The Average Treatment Effect on the Treated (ATTE) is defined as the effect of the treatment for the subpopulation of individuals who actually receive the treatment

\[ \tau_{ATTE} = E[Y(1) - Y(0)| D = 1] \]
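To see why the ATTE can differ from the average effect in the whole population, the following minimal sketch simulates potential outcomes directly. Everything here is hypothetical (the covariate `x`, the effect `2 * x`, and the participation rule); in real data only one potential outcome per individual is observed, so this is an illustration of the definition, not an estimator.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000

# Hypothetical covariate that drives both the effect size and participation.
x = rng.uniform(0.0, 1.0, size=n)

# Potential outcomes: the individual effect Y(1) - Y(0) equals 2 * x.
y0 = rng.normal(0.0, 1.0, size=n)
y1 = y0 + 2.0 * x

# Selection into treatment: individuals with larger x participate more often.
d = rng.binomial(1, x)

ate = np.mean(y1 - y0)             # E[Y(1) - Y(0)]
atte = np.mean((y1 - y0)[d == 1])  # E[Y(1) - Y(0) | D = 1]

print(f"ATE:  {ate:.3f}")
print(f"ATTE: {atte:.3f}")
```

Because participants tend to have larger `x`, the average effect among the treated exceeds the population average in this simulated setting.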

Treatment Effect on the Treated

- The ATTE for `DoubleMLIRM` models can be estimated by setting the `score` parameter to `score="ATTE"`

from doubleml import DoubleMLIRM

from sklearn.ensemble import RandomForestClassifier, RandomForestRegressor

dml_irm_atte = DoubleMLIRM(dml_data,

ml_g = RandomForestRegressor(),

ml_m = RandomForestClassifier(),

score="ATTE")

_ = dml_irm_atte.fit()

dml_irm_atte.summary.round(3)

| | coef | std err | t | P>\|t\| | 2.5 % | 97.5 % |
|---|---|---|---|---|---|---|
| e401 | 10656.592 | 2419.86 | 4.404 | 0.0 | 5913.753 | 15399.431 |

GATEs

Often, we are interested in different subpopulations of individuals

Group Average Treatment Effects (GATEs) are defined as the average treatment effect for a subpopulation of individuals (defined via an indicator \(G\))

\[ \tau_{GATE} = E[Y(1) - Y(0)| G = 1] \]

- Typical applications include:

- Customer segmentation

GATEs

For `DoubleMLIRM` models, the GATEs can be estimated based on a standard model (`score="ATE"`) by using the `gate()` method

Estimation of GATEs does not require re-estimation of the model

from doubleml import DoubleMLIRM

from sklearn.ensemble import RandomForestClassifier, RandomForestRegressor

dml_irm = DoubleMLIRM(dml_data,

ml_g = RandomForestRegressor(),

ml_m = RandomForestClassifier(),

score="ATE")

_ = dml_irm.fit()

dml_irm.summary.round(3)

| | coef | std err | t | P>\|t\| | 2.5 % | 97.5 % |
|---|---|---|---|---|---|---|
| e401 | 8919.856 | 1594.868 | 5.593 | 0.0 | 5793.972 | 12045.739 |

GATEs

- At first, we have to define groups and a corresponding pandas dataframe

| | married |
|---|---|
| 0 | 0 |
| 1 | 0 |
| 2 | 0 |

- Then, we can call the `gate()` method and construct confidence intervals with the `confint()` method
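The idea behind group effects can be sketched without the package: project an individual-level effect signal onto group indicators. The block below is not the DoubleML implementation; it assumes a simulated `signal` that stands in for the model's score values, a hypothetical binary group `g`, and plain plug-in standard errors.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 5_000

# Hypothetical group indicator G (e.g. married = 1) and a noisy effect signal
# whose conditional mean is 3.0 for G = 1 and 1.0 for G = 0.
g = rng.binomial(1, 0.4, size=n)
signal = np.where(g == 1, 3.0, 1.0) + rng.normal(0.0, 2.0, size=n)

# Basis of mutually exclusive group dummies: one column per group.
basis = np.column_stack([g == 1, g == 0]).astype(float)

# OLS of the signal on the dummies ...
coef, *_ = np.linalg.lstsq(basis, signal, rcond=None)

# ... coincides with the group-wise averages of the signal.
group_means = np.array([signal[g == 1].mean(), signal[g == 0].mean()])

# Plug-in standard errors and pointwise 95% confidence intervals.
resid = signal - basis @ coef
counts = basis.sum(axis=0)
se = np.sqrt((basis * resid[:, None] ** 2).sum(axis=0)) / counts
ci = np.column_stack([coef - 1.96 * se, coef + 1.96 * se])

print(np.round(np.column_stack([coef, ci]), 3))
```

Since only the signal and the group dummies enter the regression, no nuisance learner has to be refitted, which matches the remark that GATE estimation does not require re-estimation of the model.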

GATEs

- If we want to consider different groups, we could either call `gate()` multiple times or define the groups in a single dataframe

groups = pd.DataFrame({'married': dml_data.data['marr'] == 1,

'not_married': dml_data.data['marr'] == 0})

groups.head(n=3)

| | married | not_married |
|---|---|---|
| 0 | False | True |
| 1 | False | True |
| 2 | False | True |

- This enables valid uniform confidence intervals

GATEs

Important: For valid confidence intervals, the groups need to be mutually exclusive!

A simple and intuitive way to set up mutually exclusive groups is to use only one column to define the different groups
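One way to follow this advice is to derive all indicator columns from a single label column, so that exclusivity holds by construction. The sketch below uses hypothetical data (an `age_group` column with three made-up labels) and only pandas; whether the resulting frame is passed on to `gate()` is left to the reader.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(2)

# Hypothetical data: a single column holding each individual's group label.
df = pd.DataFrame({"age_group": rng.choice(["<35", "35-50", ">50"], size=200)})

# One boolean indicator column per label; each row is True in exactly one column.
groups = pd.get_dummies(df["age_group"], dtype=bool)

# Sanity check: the groups are mutually exclusive and cover every observation.
assert (groups.sum(axis=1) == 1).all()
print(groups.head(n=3))
```

The row-sum check is cheap and also catches overlapping groups that were built by hand from several conditions.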

CATEs

- Conditional Average Treatment Effects (CATEs) are defined as the average treatment effect for a subpopulation of individuals (defined via a vector of covariates \(X\))

\[ \tau(x) = E[Y(1) - Y(0)| X = x] \]

Depending on \(X\) this can be quite complicated to estimate (e.g. if \(X = (X_1, X_2, X_3, \dots)\) includes multiple variables)

A very simple idea is to approximate \(\tau(x)\) as a linear function of \(X\) (see Semenova and Chernozhukov (2021)):

\[ \tau(x) \approx \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots \]

- Note that the coefficients do not have a causal interpretation! This does not mean \(X\) affects \(D\) or \(D\) affects \(X\)!

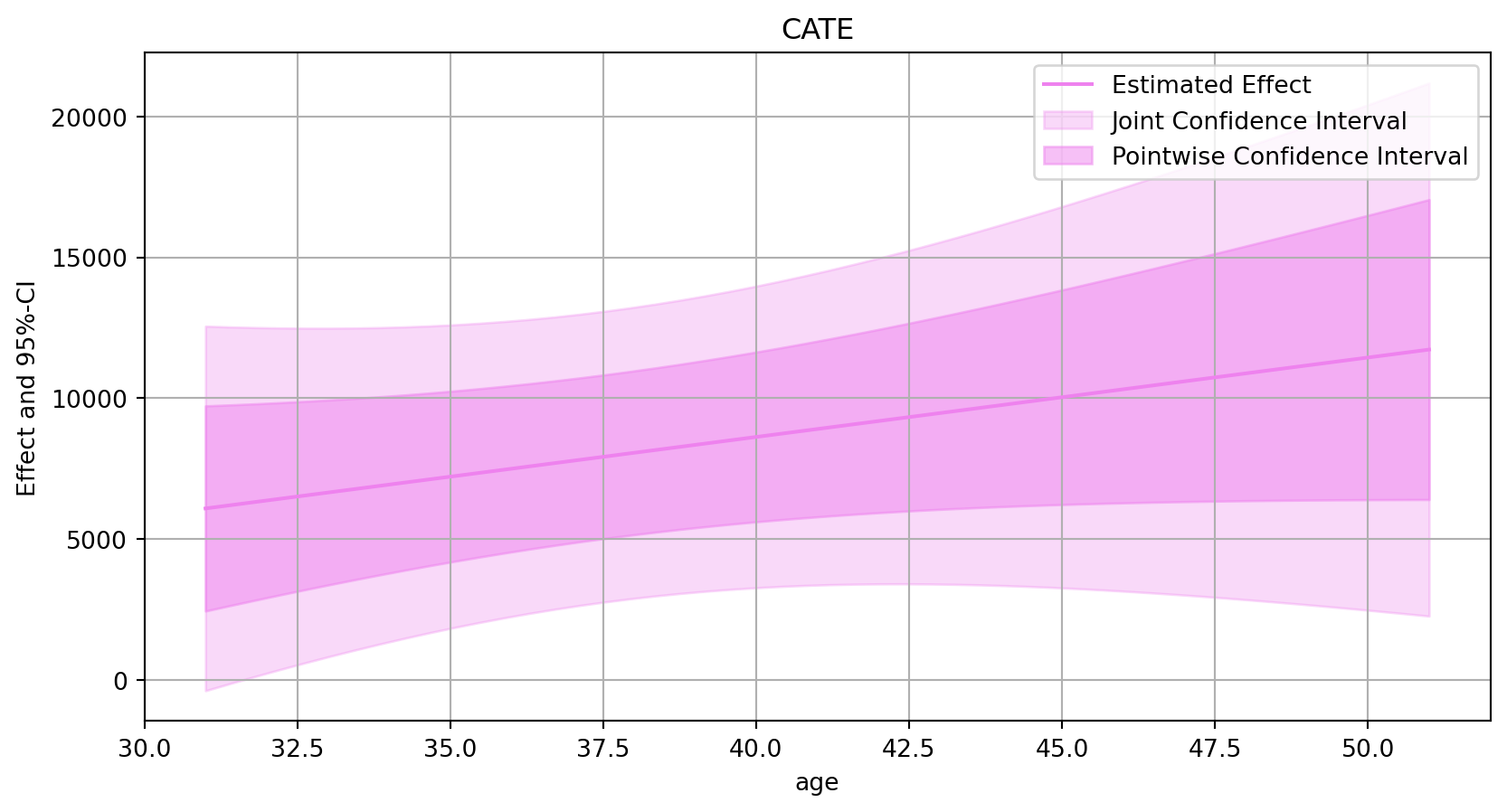
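The linear approximation can be illustrated as an ordinary least-squares projection of an individual-level effect signal on the basis. This is a minimal sketch under assumptions: the `signal` is simulated (true CATE plus noise) rather than taken from a fitted model, and the covariate range is made up.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 20_000

# Hypothetical covariate and true CATE tau(x) = 1 + 0.5 * x.
x = rng.uniform(20.0, 60.0, size=n)
tau = 1.0 + 0.5 * x

# Stand-in for an individual-level effect signal: unbiased for tau(x) but noisy.
signal = tau + rng.normal(0.0, 5.0, size=n)

# Linear basis (intercept, x).
basis = np.column_stack([np.ones(n), x])

# The best linear predictor solves an ordinary least-squares problem.
beta, *_ = np.linalg.lstsq(basis, signal, rcond=None)

# Approximated CATE on a grid of new covariate values.
x_grid = np.linspace(25.0, 55.0, 5)
tau_hat = np.column_stack([np.ones_like(x_grid), x_grid]) @ beta

print("beta:", np.round(beta, 3))
print("tau_hat on grid:", np.round(tau_hat, 3))
```

The coefficients describe how the effect varies with `x`; they do not say that changing `x` would change the effect.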

Basic CATEs

- Consider the conditional average effect as a function of `age`

age_data = dml_data.data["age"]

linear_basis = pd.DataFrame({'intercept': np.ones_like(age_data),

'age': age_data})

cate_linear = dml_irm.cate(linear_basis)

print(cate_linear)

================== DoubleMLBLP Object ==================

------------------ Fit summary ------------------

| | coef | std err | t | P>\|t\| | [0.025 | 0.975] |
|---|---|---|---|---|---|---|
| intercept | -2659.518816 | 6528.150481 | -0.407392 | 0.683729 | -15456.021077 | 10136.983446 |
| age | 282.009614 | 154.172673 | 1.829180 | 0.067403 | -20.200171 | 584.219400 |

Basic CATEs

- Usually, we are not interested in the coefficients (or their confidence intervals), but in effect estimates and confidence intervals for new observations

new_data = {"age": np.linspace(np.quantile(age_data, 0.2), np.quantile(age_data, 0.8), 50)}

linear_grid = pd.DataFrame({"intercept": np.ones_like(new_data["age"]),

"age": new_data["age"]})

df_cate_linear = cate_linear.confint(linear_grid, level=0.95, joint=True, n_rep_boot=2000)

print(df_cate_linear.head(n=8))

| | 2.5 % | effect | 97.5 % |
|---|---|---|---|
| 0 | -387.913346 | 6082.779233 | 12553.471813 |
| 1 | -130.121913 | 6197.885199 | 12525.892310 |
| 2 | 121.537870 | 6312.991164 | 12504.444457 |
| 3 | 366.651582 | 6428.097129 | 12489.542676 |
| 4 | 604.789274 | 6543.203094 | 12481.616914 |
| 5 | 835.508734 | 6658.309059 | 12481.109383 |
| 6 | 1058.359741 | 6773.415024 | 12488.470307 |
| 7 | 1272.889368 | 6888.520989 | 12504.152610 |

Basic CATEs

- This can be easily visualized


df_cate_linear_pointwise = cate_linear.confint(linear_grid, level=0.95, joint=False)

import matplotlib.pyplot as plt

df_cate_linear['age'] = new_data['age']

fig, ax = plt.subplots()

_ = ax.grid(visible=True)

_ = ax.plot(df_cate_linear['age'],df_cate_linear['effect'], color='violet', label='Estimated Effect')

_ = ax.fill_between(df_cate_linear['age'], df_cate_linear['2.5 %'], df_cate_linear['97.5 %'], color='violet', alpha=.3, label='Joint Confidence Interval')

_ = ax.fill_between(df_cate_linear['age'], df_cate_linear_pointwise['2.5 %'], df_cate_linear_pointwise['97.5 %'], color='violet', alpha=.5, label='Pointwise Confidence Interval')

_ = plt.legend()

_ = plt.title('CATE')

_ = plt.xlabel('age')

_ = plt.ylabel('Effect and 95%-CI')

plt.show()

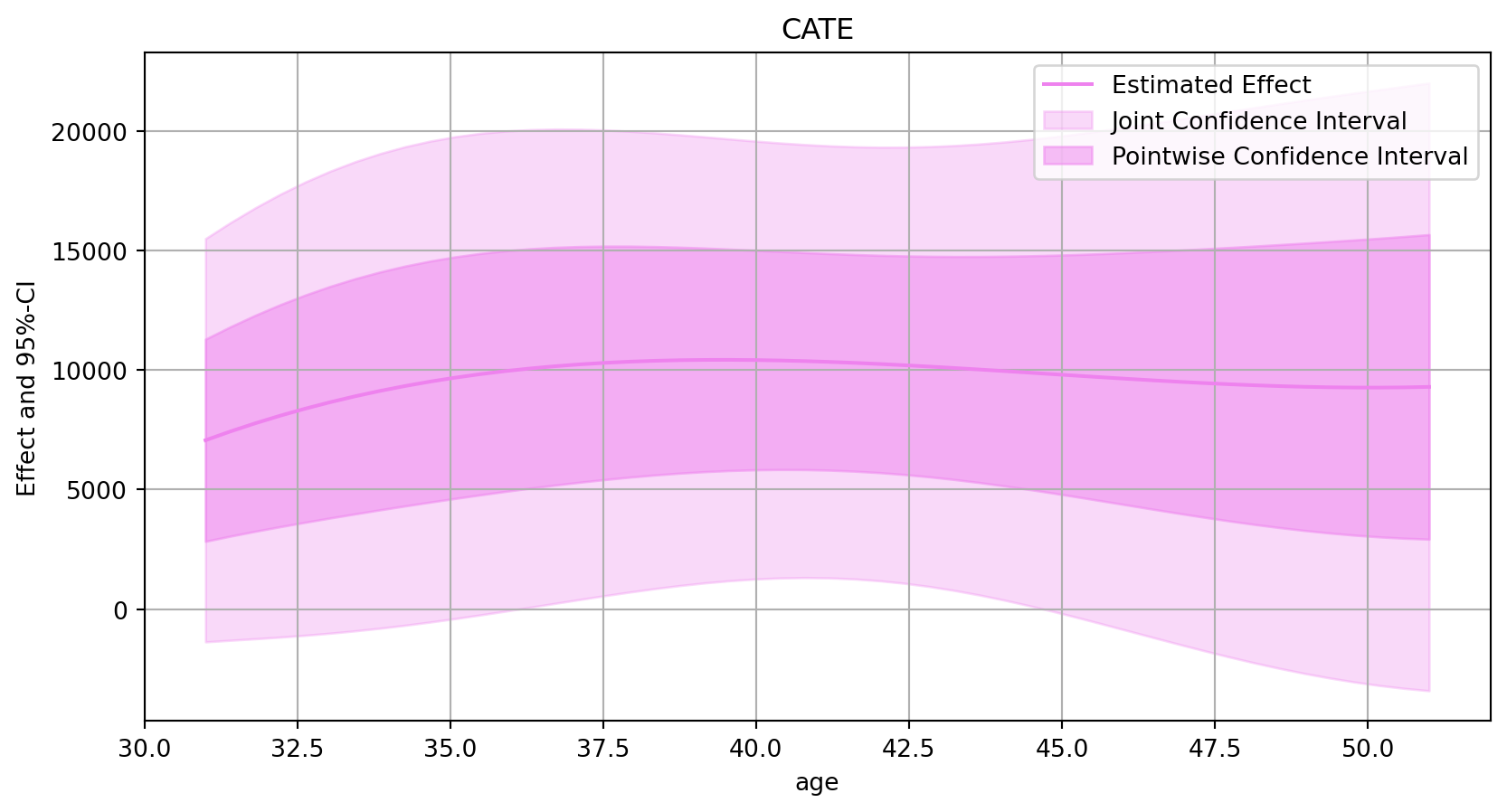
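The difference between the joint and the pointwise band comes down to the critical value. The sketch below assumes a Gaussian multiplier bootstrap on the influence function of a least-squares fit; it is meant to convey the idea behind `joint=True` and `n_rep_boot`, not to reproduce the package's internals. Data, grid, and sample sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
n, n_boot = 2_000, 1_000

# Hypothetical data: noisy effect signal that is linear in x.
x = rng.uniform(0.0, 1.0, size=n)
signal = 1.0 + 2.0 * x + rng.normal(0.0, 1.0, size=n)
basis = np.column_stack([np.ones(n), x])

# OLS fit and its influence function, psi_i = (B'B/n)^{-1} b_i * residual_i.
beta, *_ = np.linalg.lstsq(basis, signal, rcond=None)
resid = signal - basis @ beta
bread = np.linalg.inv(basis.T @ basis / n)
influence = (basis * resid[:, None]) @ bread.T  # shape (n, 2)

# Grid of new points and plug-in standard errors of the fitted values.
grid = np.column_stack([np.ones(50), np.linspace(0.2, 0.8, 50)])
omega = influence.T @ influence / n  # covariance of sqrt(n) * beta_hat
se = np.sqrt(np.einsum("ij,jk,ik->i", grid, omega, grid) / n)

# Multiplier bootstrap: perturb the influence function with N(0, 1) weights.
weights = rng.normal(size=(n_boot, n))
beta_star = weights @ influence / n        # shape (n_boot, 2)
t_star = np.abs(beta_star @ grid.T) / se   # shape (n_boot, 50)

# Pointwise bands use one critical value per grid point; the joint band uses
# the quantile of the maximal statistic over the whole grid.
crit_pointwise = np.quantile(t_star, 0.95, axis=0)
crit_joint = np.quantile(t_star.max(axis=1), 0.95)

effect = grid @ beta
joint_band = np.column_stack([effect - crit_joint * se, effect + crit_joint * se])

print(f"largest pointwise critical value: {crit_pointwise.max():.3f}")
print(f"joint critical value:             {crit_joint:.3f}")
```

Because the joint critical value is taken from the maximum over all grid points, it can never be smaller than any pointwise one, which is why the joint band in the plot encloses the pointwise band.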

Polynomial CATE Approximations

- Since the linear approximation is quite restrictive, we can also use a more flexible approximation, e.g. polynomial

\[ \tau(x)\approx \beta_0 + \beta_1 x_1 + \beta_2 x_1^2 + \dots \]

from sklearn.preprocessing import PolynomialFeatures

# Create the polynomial features object

poly = PolynomialFeatures(degree=3)

poly_basis_array = poly.fit_transform(dml_data.data[["age"]])

poly_basis = pd.DataFrame(poly_basis_array, columns=poly.get_feature_names_out())

print(poly_basis.head())

| | 1 | age | age^2 | age^3 |
|---|---|---|---|---|
| 0 | 1.0 | 47.0 | 2209.0 | 103823.0 |
| 1 | 1.0 | 36.0 | 1296.0 | 46656.0 |
| 2 | 1.0 | 37.0 | 1369.0 | 50653.0 |
| 3 | 1.0 | 58.0 | 3364.0 | 195112.0 |
| 4 | 1.0 | 32.0 | 1024.0 | 32768.0 |

Polynomial CATE Approximations

- Now, we can estimate the CATEs based on the polynomial basis

cate_poly = dml_irm.cate(poly_basis)

print(cate_poly)

================== DoubleMLBLP Object ==================

------------------ Fit summary ------------------

| | coef | std err | t | P>\|t\| | [0.025 | 0.975] |
|---|---|---|---|---|---|---|
| 1 | -156771.428293 | 110574.481330 | -1.417790 | 0.156283 | -373519.899306 | 59977.042721 |
| age | 11501.004758 | 8119.848867 | 1.416406 | 0.156688 | -4415.550361 | 27417.559878 |
| age^2 | -260.747500 | 191.922980 | -1.358605 | 0.174303 | -636.955572 | 115.460573 |
| age^3 | 1.942806 | 1.462744 | 1.328193 | 0.184145 | -0.924469 | 4.810081 |

Polynomial CATE Approximations

- Create a regular grid of new observations

poly_grid = pd.DataFrame(poly.transform(new_data["age"].reshape(-1,1)),

columns=poly.get_feature_names_out())

df_cate_poly = cate_poly.confint(poly_grid, level=0.95, joint=True, n_rep_boot=2000)

print(df_cate_poly.head(n=8))

| | 2.5 % | effect | 97.5 % |
|---|---|---|---|
| 0 | -1371.784065 | 7059.496867 | 15490.777800 |
| 1 | -1305.121742 | 7428.236489 | 16161.594719 |
| 2 | -1240.794431 | 7771.091021 | 16782.976473 |
| 3 | -1172.562904 | 8088.853116 | 17350.269136 |
| 4 | -1096.322779 | 8382.315426 | 17860.953631 |
| 5 | -1009.553305 | 8652.270602 | 18314.094509 |
| 6 | -910.920448 | 8899.511296 | 18709.943039 |
| 7 | -799.993175 | 9124.830159 | 19049.653492 |

Polynomial CATE Approximations

- And visualize the results


df_cate_poly_pointwise = cate_poly.confint(poly_grid, level=0.95, joint=False)

import matplotlib.pyplot as plt

df_cate_poly['age'] = new_data['age']

fig, ax = plt.subplots()

_ = ax.grid(visible=True)

_ = ax.plot(df_cate_poly['age'],df_cate_poly['effect'], color='violet', label='Estimated Effect')

_ = ax.fill_between(df_cate_poly['age'], df_cate_poly['2.5 %'], df_cate_poly['97.5 %'], color='violet', alpha=.3, label='Joint Confidence Interval')

_ = ax.fill_between(df_cate_poly['age'], df_cate_poly_pointwise['2.5 %'], df_cate_poly_pointwise['97.5 %'], color='violet', alpha=.5, label='Pointwise Confidence Interval')

_ = plt.legend()

_ = plt.title('CATE')

_ = plt.xlabel('age')

_ = plt.ylabel('Effect and 95%-CI')

plt.show()

Policy Learning with Trees

The `DoubleML` package also supports basic policy learning with trees for the `DoubleMLIRM` model (similar to Athey and Wager (2021))

General reasoning: If we know that the treatment effect is positive for some observations and negative for others, why don’t we base our treatment assignment on that knowledge?

Idea: Use a classification tree to optimize over feature regions

Let \(X\) be the set of covariates for which we want to optimize the treatment assignment with a policy

\[ \pi: X \rightarrow \{0,1\} \]

Policy Learning with Trees

- The policy is then defined by maximizing the following objective function

\[ \frac{1}{n}\sum_{i=1}^n \underbrace{\left(2\pi(X_i) - 1\right)}_{\text{policy decision}} \underbrace{\psi_b(W_i, \hat{\eta})}_{\text{effect size}} = \frac{1}{n}\sum_{i=1}^n \left(2\pi(X_i) - 1\right) \underbrace{\text{sign}\left(\psi_b(W_i, \hat{\eta})\right)}_{\text{label}} \underbrace{\lvert \psi_b(W_i, \hat{\eta}) \rvert} _{\text{weight}}. \]
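The identity above can be checked numerically, and the objective can be optimized by brute force for a very simple policy class. The sketch assumes simulated score values `psi` and a hypothetical one-dimensional feature `x`; the threshold rule stands in for a depth-one tree and the helper names are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 1_000

# Hypothetical feature and effect scores psi_b: positive effects for x > 0.3.
x = rng.uniform(0.0, 1.0, size=n)
psi = (x - 0.3) * 4.0 + rng.normal(0.0, 1.0, size=n)


def policy_value(pi, psi):
    """Objective (1/n) * sum((2 * pi - 1) * psi) for a 0/1 policy vector."""
    return np.mean((2 * pi - 1) * psi)


def weighted_classification_value(pi, psi):
    """Same objective written with labels sign(psi) and weights |psi|."""
    return np.mean((2 * pi - 1) * np.sign(psi) * np.abs(psi))


# Depth-one "tree": treat if x exceeds a threshold; pick the best threshold.
thresholds = np.linspace(0.0, 1.0, 101)
values = np.array([policy_value((x > c).astype(int), psi) for c in thresholds])
best_threshold = thresholds[np.argmax(values)]

treat_all = np.ones(n, dtype=int)
print(f"best threshold:      {best_threshold:.2f}")
print(f"value of best rule:  {values.max():.3f}")
print(f"value of treat-all:  {policy_value(treat_all, psi):.3f}")
```

A policy earns `+psi` where it treats and `-psi` where it does not, so the objective is largest when treatment is assigned exactly where the score is positive; this is what turns the problem into a weighted classification task.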

Policy Learning with Trees

Policy trees can be fitted via the `policy_tree()` method

As the tree is based on the scores, the learners do not have to be re-estimated

Policy Learning with Trees

Recommendation

Evaluation of learned policies should be performed on a separate test set

Shallow trees are recommended for policy learning

Policy tree implementation is an approximation; a formal framework is provided in Athey and Wager (2021)

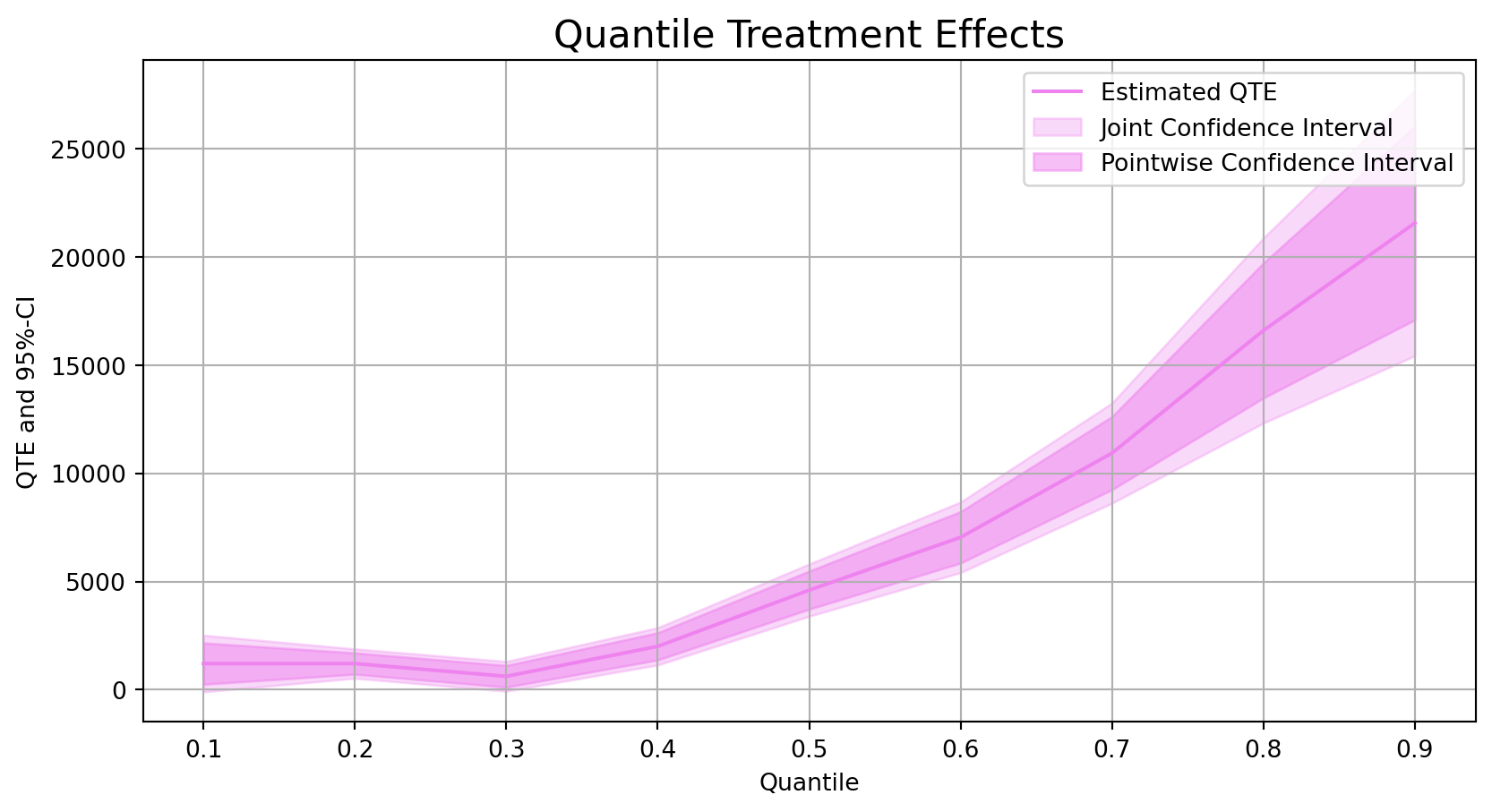
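The first two recommendations can be sketched with scikit-learn alone: fit a shallow classification tree on weighted labels and evaluate the resulting policy on held-out scores. This is an assumption-laden illustration, not the `policy_tree()` implementation; the features, the simulated scores `psi`, and the 50/50 split are all hypothetical.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(6)
n = 4_000

# Hypothetical features and effect scores; the effect is positive iff x0 > 0.5.
X = rng.uniform(0.0, 1.0, size=(n, 3))
psi = np.where(X[:, 0] > 0.5, 2.0, -2.0) + rng.normal(0.0, 3.0, size=n)

X_train, X_test, psi_train, psi_test = train_test_split(
    X, psi, test_size=0.5, random_state=0
)

# Weighted classification: label = sign of the score, weight = its magnitude.
tree = DecisionTreeClassifier(max_depth=2, random_state=0)
tree.fit(X_train, (psi_train > 0).astype(int), sample_weight=np.abs(psi_train))

# Evaluate the learned policy on held-out scores only.
pi_test = tree.predict(X_test)
value_learned = np.mean((2 * pi_test - 1) * psi_test)
value_treat_all = np.mean(psi_test)

print(f"held-out value, learned policy: {value_learned:.3f}")
print(f"held-out value, treat everyone: {value_treat_all:.3f}")
```

Evaluating on the training scores would overstate the value of the policy, since the tree has already adapted to their noise; a small `max_depth` limits that adaptation and keeps the rule interpretable.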

Outlook: Quantile Treatment Effects

Quantile Treatment Effects (QTEs)

- QTEs (from Kallus, Mao, and Uehara (2019)) are implemented via a separate class

from doubleml import DoubleMLQTE

from lightgbm import LGBMClassifier, LGBMRegressor

from sklearn.base import clone

tau_vec = np.arange(0.1,0.95,0.1)

n_folds = 5

# Learners

class_learner = LGBMClassifier(n_estimators=300, learning_rate=0.05, num_leaves=10)

np.random.seed(42)

dml_QTE = DoubleMLQTE(dml_data, ml_g=clone(class_learner), ml_m=clone(class_learner),

quantiles=tau_vec, score='PQ', normalize_ipw=True)

_ = dml_QTE.fit()

print(dml_QTE)

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 339

[LightGBM] [Info] Number of data points in the train set: 7932, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371407 -> initscore=-0.526186

[LightGBM] [Info] Start training from score -0.526186

[LightGBM] [Info] Number of positive: 1178, number of negative: 1994

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000275 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 336

[LightGBM] [Info] Number of data points in the train set: 3172, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371375 -> initscore=-0.526325

[LightGBM] [Info] Start training from score -0.526325

[LightGBM] [Info] Number of positive: 1179, number of negative: 1994

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000329 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 336

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371573 -> initscore=-0.525476

[LightGBM] [Info] Start training from score -0.525476

[LightGBM] [Info] Number of positive: 1179, number of negative: 1994

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000213 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 336

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371573 -> initscore=-0.525476

[LightGBM] [Info] Start training from score -0.525476

[LightGBM] [Info] Number of positive: 1178, number of negative: 1995

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000265 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 335

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371257 -> initscore=-0.526826

[LightGBM] [Info] Start training from score -0.526826

[LightGBM] [Info] Number of positive: 1178, number of negative: 1995

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000230 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 336

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371257 -> initscore=-0.526826

[LightGBM] [Info] Start training from score -0.526826

[LightGBM] [Info] Number of positive: 158, number of negative: 1315

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000249 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 332

[LightGBM] [Info] Number of data points in the train set: 1473, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.107264 -> initscore=-2.118997

[LightGBM] [Info] Start training from score -2.118997

[LightGBM] [Info] Number of positive: 2946, number of negative: 4986

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000448 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 338

[LightGBM] [Info] Number of data points in the train set: 7932, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371407 -> initscore=-0.526186

[LightGBM] [Info] Start training from score -0.526186

[LightGBM] [Info] Number of positive: 1178, number of negative: 1994

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000319 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 337

[LightGBM] [Info] Number of data points in the train set: 3172, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371375 -> initscore=-0.526325

[LightGBM] [Info] Start training from score -0.526325

[LightGBM] [Info] Number of positive: 1179, number of negative: 1994

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000259 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 335

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371573 -> initscore=-0.525476

[LightGBM] [Info] Start training from score -0.525476

[LightGBM] [Info] Number of positive: 1179, number of negative: 1994

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000287 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 337

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371573 -> initscore=-0.525476

[LightGBM] [Info] Start training from score -0.525476

[LightGBM] [Info] Number of positive: 1178, number of negative: 1995

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000269 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 337

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371257 -> initscore=-0.526826

[LightGBM] [Info] Start training from score -0.526826

[LightGBM] [Info] Number of positive: 1178, number of negative: 1995

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000301 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 337

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371257 -> initscore=-0.526826

[LightGBM] [Info] Start training from score -0.526826

[LightGBM] [Info] Number of positive: 121, number of negative: 1352

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000150 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 332

[LightGBM] [Info] Number of data points in the train set: 1473, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.082145 -> initscore=-2.413550

[LightGBM] [Info] Start training from score -2.413550

[LightGBM] [Info] Number of positive: 2946, number of negative: 4986

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000536 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 339

[LightGBM] [Info] Number of data points in the train set: 7932, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371407 -> initscore=-0.526186

[LightGBM] [Info] Start training from score -0.526186

[LightGBM] [Info] Number of positive: 1178, number of negative: 1994

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000266 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 337

[LightGBM] [Info] Number of data points in the train set: 3172, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371375 -> initscore=-0.526325

[LightGBM] [Info] Start training from score -0.526325

[LightGBM] [Info] Number of positive: 1179, number of negative: 1994

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000360 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 337

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371573 -> initscore=-0.525476

[LightGBM] [Info] Start training from score -0.525476

[LightGBM] [Info] Number of positive: 1179, number of negative: 1994

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000281 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 337

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371573 -> initscore=-0.525476

[LightGBM] [Info] Start training from score -0.525476

[LightGBM] [Info] Number of positive: 1178, number of negative: 1995

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000263 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 338

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371257 -> initscore=-0.526826

[LightGBM] [Info] Start training from score -0.526826

[LightGBM] [Info] Number of positive: 1178, number of negative: 1995

[LightGBM] [Warning] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000324 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 337

[LightGBM] [Info] Number of data points in the train set: 3173, number of used features: 9

[LightGBM] [Info] [binary:BoostFromScore]: pavg=0.371257 -> initscore=-0.526826

[LightGBM] [Info] Start training from score -0.526826


[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements

[LightGBM] [Warning] Stopped training because there are no more leaves that meet the split requirements